Failure to follow Data Privacy Compliance requirements can be costly. How can you prepare your business?

GDPR, CCPA, and the upcoming CPRA (which goes into effect January 1, 2023) require intensive data management; the cost of non-compliance can rise to $1,000 per record. These data privacy laws apply broadly to consumers’ personal information, including:

- Account and login information

- GPS and other location data

- Social security numbers

- Health information

- Driver’s license and passport information

- Genetic and biometric data

- Mail, email, and text message content

- Personal communications

- Financial account information

- Browsing history, search history, or online behavior

- Information about race, ethnicity, religion, union membership, sex life, or sexual orientation

CPRA requires businesses to inform consumers how long they will retain data (for each category of data) and the criteria used to determine what retention period is “reasonably necessary.” In short, you have to be prepared to justify data collection, processing, and retention, and to tie each directly to a legitimate business purpose.
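One way to make the per-category retention requirement concrete is to record, for each data category, a retention period together with the business purpose that justifies it. The sketch below is illustrative only: the category names, the periods, and the `RetentionRule`, `POLICY`, and `is_expired` names are all assumptions made for this example, not values or terms taken from the CPRA.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class RetentionRule:
    category: str        # category of personal information
    retention_days: int  # hypothetical period, not a legal value
    purpose: str         # business purpose that justifies retention


# Hypothetical policy table; real periods must come from legal review.
POLICY = {
    "financial_account": RetentionRule(
        "financial_account", 365 * 7, "billing and tax records"
    ),
    "browsing_history": RetentionRule(
        "browsing_history", 90, "site analytics"
    ),
}


def is_expired(category: str, collected_on: date, today: date) -> bool:
    """Return True when a record has outlived its category's retention period."""
    rule = POLICY[category]
    return today > collected_on + timedelta(days=rule.retention_days)
```

Keeping the purpose next to the period means the same table can drive both automated deletion jobs and the disclosure made to consumers.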

Now prohibited is “sharing” data (beyond buying and selling it), which is defined as: sharing, renting, releasing, disclosing, disseminating, making available, transferring or otherwise communicating orally, in writing, or by electronic or other means, a consumer’s personal information by the business to a third party for cross-context behavioral advertising, whether or not for monetary or other valuable consideration, including transactions between a business and a third party for the benefit of a business in which no money is exchanged. Arguably, this covers a business working with a marketing firm and sharing data with the agency about leads or prospects to employ cross-context behavioral advertising in a campaign.

Companies now need to be able to tell consumers what types of data they hold about them, what business purposes the data is used for, and how long it is retained. This information allows companies to meet the expanded consumer rights granted by the CPRA, including deleting data, limiting the use of certain types of data, correcting data errors in every location where the data lives across the organization, automating and executing data retention policies, and being ready for audits. Although these rules set an ethical standard, they also mean that companies must now weigh possible non-compliance costs in addition to operational and opportunity costs.

As a result of these requirements, companies now need a data management system with built-in data governance to stay in compliance. These data management platforms must be capable of identifying, on a field-by-field basis, where the data originates, every change made to the data, and each downstream user of the data (databases, apps, analytics, queries, extracts, dashboards). Without automated data management and governance, it will be practically impossible to find this information manually by the required deadlines. You need to be able to automatically replicate changes made to the data to all locations where the data resides throughout your organization, so that consumer-directed corrections and deletions are made within the prescribed time limits.
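The field-level tracking described above can be pictured with a small sketch. It assumes a simple in-memory model in which each field has one originating system and a set of downstream systems holding copies; the `FieldLineage` class, the `propagate_deletion` function, and the layout of `stores` are hypothetical names chosen for illustration and do not describe any particular product.

```python
from collections import defaultdict


class FieldLineage:
    """Tracks, per field, its origin, change history, and downstream copies."""

    def __init__(self):
        self.origin = {}                    # field -> source system
        self.changes = defaultdict(list)    # field -> list of change notes
        self.downstream = defaultdict(set)  # field -> systems holding a copy

    def register(self, field, source):
        self.origin[field] = source

    def add_consumer(self, field, system):
        self.downstream[field].add(system)

    def record_change(self, field, note):
        self.changes[field].append(note)

    def locations(self, field):
        """Every system where the field lives: its origin plus all copies."""
        return sorted({self.origin[field]} | self.downstream[field])


def propagate_deletion(lineage, field, stores, consumer_id):
    """Delete one consumer's value for a field everywhere it resides.

    `stores` maps system name -> consumer id -> {field: value}.
    Returns the systems where a value was actually removed.
    """
    touched = []
    for system in lineage.locations(field):
        record = stores.get(system, {}).get(consumer_id, {})
        if field in record:
            del record[field]
            touched.append(system)
    lineage.record_change(field, f"deleted for consumer {consumer_id}")
    return touched
```

Because the lineage already lists every location of a field, a deletion request becomes a loop over known systems rather than a manual search, and the change log gives auditors a record of what was done.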

Critical Success Factors for Data Accuracy Platforms

The data accuracy market is currently undergoing a paradigm shift from complex, monolithic, on-premise solutions to nimble, lightweight, cloud-first solutions. As the production of data accelerates, the costs associated with maintaining bad data will grow exponentially and companies will no longer have the luxury of putting data quality concerns on the shelf to be dealt with “tomorrow.”

When analyzing the major success factors for data accuracy platforms in this rapidly evolving market, four are critically important to evaluate in every buyer’s journey. Simply put, these are: Installation, Business Adoption & Use, Return on Investment, and Business Intelligence & Analytics.

Installation

When executing against corporate data strategies, it is imperative to show measured progress quickly. Complex installations that require cross-functional technical teams and invasive changes to your infrastructure will prevent data governance leaders from demonstrating tangible results within a reasonable time frame. That is why it is critical that next-gen data accuracy platforms be easy to install.

Business Adoption & Use

Many of the data accuracy solutions available on the market today are packed with so many complicated configuration options and features that they require extensive technical training in order to be used properly. When the barrier to adoption and use is so high, showing results fast is nearly impossible. That is why it is critical that data accuracy solutions be easy to adopt and use.

Return on Investment

The ability to demonstrate ROI quickly is a critical enabler for securing executive buy-in and garnering organizational support for an enterprise data governance program. In addition to being easy to install, adopt, and use, next-gen data accuracy solutions must also make it easy to track progress against your enterprise data governance goals.

Business Intelligence & Analytics

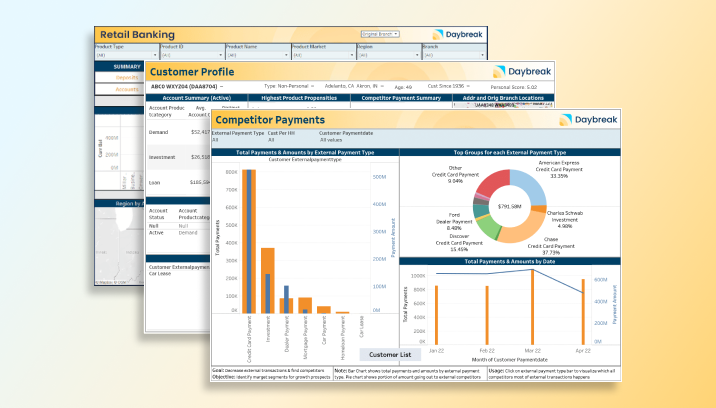

At the end of the day, a data accuracy program will be judged on the extent to which it can enable powerful analytics capabilities across the organization. Having clean data is one thing. Leveraging that data to gain competitive advantage through actionable insights is another. Data accuracy platforms must be capable of preparing data for processing by best-in-class machine learning and data analytics engines.

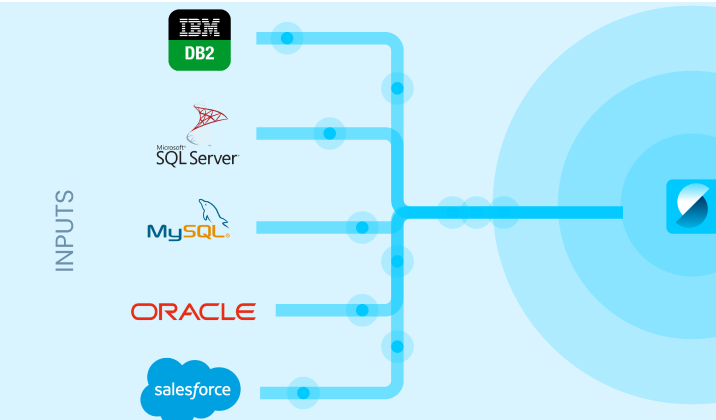

Look for solutions that offer data profiling, data enrichment and master data management tools and that can aggregate and cleanse data across highly disparate data sources and organize it for consumption by analytics engines both inside and outside the data warehouse.

Meeting the Challenges of Digital Transformation and Data Governance

To meet these challenges, companies will be tempted to turn to traditional Data Quality (DQ) and Master Data Management (MDM) solutions for help. However, it is now clear that traditional solutions have not made good on the promise of helping organizations achieve their data quality goals. In 2017, the Harvard Business Review reported that only 3 percent of companies’ data currently meets basic data quality standards even though traditional solutions have been on the market for well over a decade.1

The failure of traditional solutions to help organizations meet these challenges is due to at least two factors. First, traditional solutions typically require exorbitant quantities of time, money, and human resources to implement. Traditional installations can take months or years, and often require prolonged interaction with the IT department. Extensive infrastructure changes need to be made and substantial amounts of custom code need to be written just to get the system up and running. As a result, only a small subset of the company’s systems may be selected for participation in the data quality efforts, making it nearly impossible to demonstrate progress against data quality goals.

Second, traditional solutions struggle to interact with big data, which is an exponentially growing source of low-quality data within modern organizations. This is because traditional systems typically require source data to be organized into relational schemas and to be formatted under traditional data types, whereas most big data is either semi-structured or unstructured in format. Furthermore, these solutions can only connect to data at rest, which ensures that they cannot interact with data streaming directly out of IoT devices, edge services or click logs.

Yet, demand for data quality grows. Gartner predicts that by 2023, intercloud and hybrid environments will realign from primarily managing data stores to integration.

Therefore, a new generation of cloud-native Data Accuracy solutions is needed to meet the challenges of digital transformation and modern data governance. These solutions must be capable of ingesting massive quantities of real-time, semi-structured or unstructured data, and be capable of processing that data both in-place and in-motion.2 These solutions must also be easy for companies to install, configure and use, so that ROI can be demonstrated quickly. As such, the Data Accuracy market will be won by vendors who can empower business users with point-and-click installations, best-in-class usability and exceptional scalability, while also enabling companies to capitalize on emerging trends in big data, IoT and machine learning.

1. Tadhg Nagle, Thomas C. Redman, David Sammon (2017). Only 3% of Companies’ Data Meets Basic Data Quality Standards. Retrieved from https://hbr.org/2017/09/only-3-of-companiesdata-meets-basic-quality-standards

2. Michael Ger, Richard Dobson (2018). Digital Transformation and the New Data Quality Imperative. Retrieved from https://2xbbhjxc6wk3v21p62t8n4d4-wpengine.netdna-ssl.com/wpcontent/uploads/2018/08/Digital-Transformation.pdf

Aunalytics on ActualTech Media's Spotlight Series

Aunalytics CMO Katie Horvath sat down with David Davis of ActualTech Media to discuss helping companies with their data challenges. By providing Insights as a Service, we make sure data is managed and organized so it’s ready to answer your business questions.

Why the Data Lake – Benefits and Drawbacks

A data lake solves the problem of having disparate data sources living in different applications, databases and other data silos. While traditional data warehouses brought data together into one place, they typically took quite a bit of time to build due to the complex data management operations required to transform the data as it was transferred into the on-premise infrastructure of the warehouse. This led to the development of the data lake – a quick and easy cloud-based solution for bringing data together into one place. Data lake popularity has climbed significantly since launch, as APIs quickly connect data sources to the data lake to bring data together. Data lakes have redefined ETL (extract, transform, and load) as ELT (extract, load, and transform): data is loaded quickly, and transformation is left for later.

However, data in data lakes is not organized, connected, and made usable as a single source of truth. The problem with disparate data sources has only been moved to a different portion of the process. Data lakes do not automatically combine data from the multiple relocated sources together for analytics, reporting, and other uses. Data lakes lack data management, such as master data management, data quality, governance, and data accuracy technologies that produce trusted data available for use across an organization.

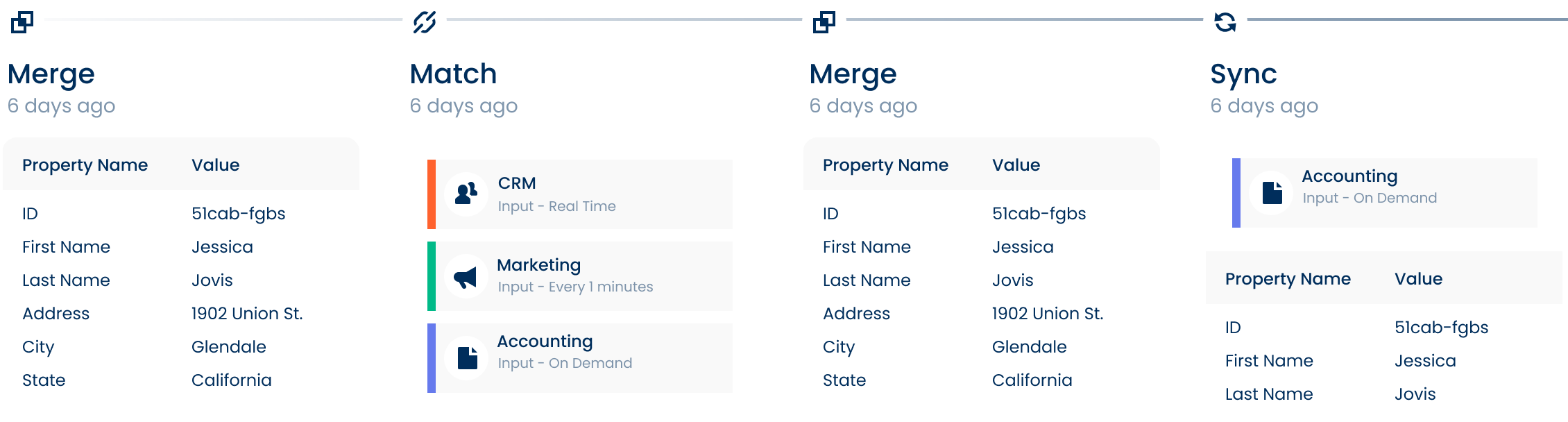

Solutions such as the cloud-native Aunsight™ Golden Record bring data accuracy, matching, and merging to the lake. In this manner, data lakes can have the data management of data warehouses yet remain nimble as cloud solutions. Ultimately, the goal is to bring the data from the multiple data silos together for better analytics, accurate executive reporting, and customer 360 and product 360 views for better decision-making. This requires a data management solution that normalizes data of different forms and formats, to bring it into a single data model ready for dashboards, analytics, and queries. Pairing a data lake with a cloud-native data management solution with built-in governance provides faster data integration success and analytics-ready data than traditional data warehouse technologies.

Aunsight Golden Record takes data lakes a step further by not only aggregating disparate data, but also cleansing data to reduce errors and matching and merging it together into a single source of accurate business information – giving you access to consistent trusted data across your organization in real-time.
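To illustrate what matching and merging mean in general terms, the sketch below groups records from different sources by a normalized email address and merges each group into a single record. It is a deliberately simplified assumption (exact match on one normalized key, first non-empty value wins) with hypothetical function and field names; it is not a description of how Aunsight Golden Record works, and real matching typically relies on much richer rules.

```python
def normalize_email(value):
    """Trim whitespace and lowercase so equivalent emails compare equal."""
    return value.strip().lower()


def merge_records(records):
    """Group records by normalized email and merge each group into one record."""
    golden = {}
    for record in records:
        key = normalize_email(record["email"])
        merged = golden.setdefault(key, {"email": key})
        for field, value in record.items():
            if field == "email":
                continue
            # Keep the first non-empty value seen for each field.
            if value and not merged.get(field):
                merged[field] = value
    return list(golden.values())
```

For example, a CRM row with a name but no phone number and a billing row with a phone number but no name would collapse into one record carrying both values, provided their email addresses normalize to the same key.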

Where Can I Find an End-to-End Data Analytics Solution?

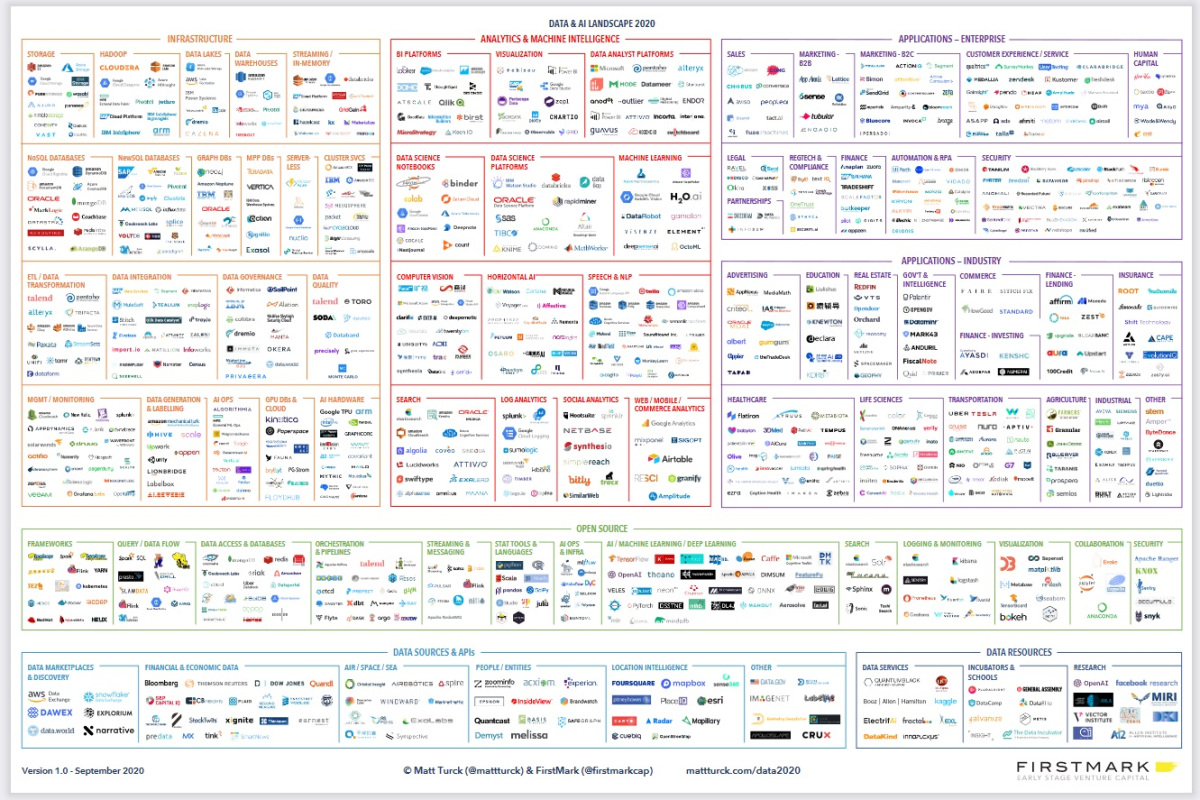

The data analytics landscape has exploded over the past decade with an ever-growing selection of products and services: literally thousands of tools exist to help businesses deploy and manage data lakes, ETL and ELT, machine learning, and business intelligence. With so many tools available, how do business leaders find the best one or ones? How do you piece them together and use them to get business outcomes? The truth is that many tools are built for data scientists, data engineers and other users with technical expertise. With most tools, if you do not have a data science department, your company is at risk of buying technologies that your team does not have the expertise to use and maintain. This turns digital transformation into a cost center instead of sparking data-driven revenue growth.

Image credit: Firstmark

https://venturebeat.com/2020/10/21/the-2020-data-and-ai-landscape/

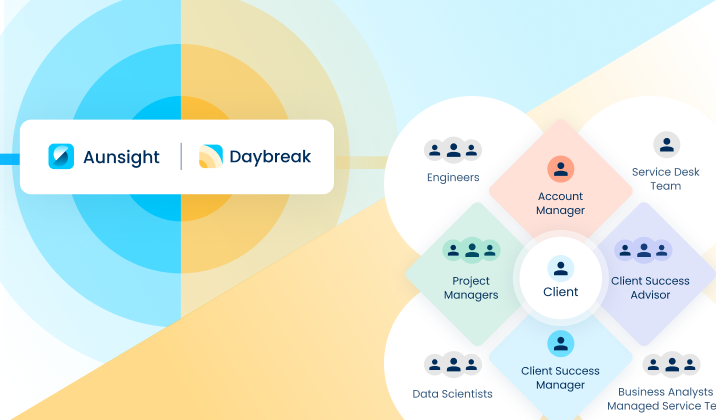

Aunalytics’ side-by-side service model provides value that goes beyond most other tools and platforms on the market by providing a data platform with built-in data management and analytics, as well as access to human intelligence in data engineering, machine learning, and business analytics. While many companies offer one or two similar products, and many consulting firms can provide guidance in choosing and implementing tools, Aunalytics integrates all the tools and expertise in one end-to-end solution built for non-technical business users. The success of a digital transformation project should be measured not by implementation milestones reached, but by business outcomes.

White Paper: The 1-10-100 Rule and Privacy Compliance

GDPR, CCPA, and the upcoming CPRA require intensive data management; the cost of non-compliance can rise to $1,000 per record. You need a data management system with built-in data governance to be able to comply with these regulations.

White Paper: Explaining the 1-10-100 Rule of Data Quality

The 1-10-100 Rule pertains to the cost of bad quality. Data across a company is paramount to operations, executive decision-making, strategy, execution, and providing outstanding customer service. Yet, many enterprises are plagued by having data riddled with errors and inconsistencies.