Aunalytics Cites Cybersecurity Best Practices for Financial Services as Attacks Rise 118% in 2021

Secure Managed Services Provider Protects Community Banks and Credit Unions as Cybercriminals Double Down on Efforts to Breach Financial Data, Compromise Accounts, and Profit Illegally

South Bend, IN (December 14, 2021) – Aunalytics, a leading data platform company delivering Insights-as-a-Service for enterprise businesses, today announced several cybersecurity best practices for financial services firms, including community banks and credit unions. This guidance follows new data showing that hackers will continue to strike these organizations with increasing sophistication, targeting the high-value data they hold.

For the past six years, the finance sector has ranked as the most cyberattacked industry. In 2020, attacks against banks and other financial institutions climbed 238%, followed by a further 118% increase in 2021. One example is the Europe-based Carbanak and Cobalt malware campaigns, which targeted more than 100 financial institutions in more than 40 countries over five years, yielding criminal profits of more than a billion euros.

According to the 2021 Modern Bank Heists 4.0 survey, the most common types of attacks hitting the financial services sector in 2020 and 2021 were server attacks, data theft, and ransomware. The survey also found that 57% of surveyed financial institutions reported an increase in wire transfer fraud, 54% had experienced destructive attacks, 41% had suffered brokerage account takeovers, 51% had experienced attacks on target market strategy data, 38% had suffered attacks in which hackers used trusted supply chain partners to gain entry into the bank, and 41% had fallen victim to manipulated timestamps resulting in fund theft.

Increasing the challenge for financial institutions is the fact that the current unemployment rate for IT security professionals is approximately 0%. The scarcity of highly skilled security professionals is compounded by the huge volume of emerging threats. As a result, financial institutions are struggling to keep up with the ever-increasing threat landscape. Digitalization in commerce is driving the need for continuously adapting and evolving skills and knowledge for IT security.

Best Practices for the Defense Against Cyber Threats

As a specialist in secure managed services, Aunalytics has reviewed the top actions necessary to secure IT, administrative, and consumer-facing environments in the financial services space, prioritizing the most important areas to consider when securing these businesses against a cyberattack. These best practices include:

- Continuously updating security technology and protocols as threats evolve and adapt. This means deploying a dedicated full-time security team, not an overworked IT department that handles system stability and the help desk while also trying to keep abreast of the latest security threats and technologies and piecing together security tools. That patchwork approach is not a solution.

- Employing 24/7/365 monitoring with remote remediation to quickly stop attacks in their tracks.

- Monitoring endpoint devices to stop attacks before they hit networks. User devices are the most likely entry point for attackers to compromise a financial institution because innocent user error so often opens the door.

- Monitoring cloud security including application use across the financial institution to be on the lookout for atypical user behavior signaling an attack.

- Monitoring email and Office 365 using tools specially designed to thwart attacks on these platforms, such as recognizing and removing phishing scams before an employee’s mistaken click can cause serious harm.

- Having a dedicated security team and SOC, or hiring an expert outside managed security services firm that embeds tools, technology, and 24/7/365 monitoring to serve as an SOC. This is a must for financial institutions.

- Pushing frequent patches so that user devices are equipped with the latest security protections.

- Adopting deep learning or AI monitoring, mitigation and context investigation that can more quickly identify threats.

- Encrypting data so that it is not compromised even if a breach occurs.

- Using multi-factor authentication to protect against unauthorized access.

- Instructing employees and customers to only access bank data in a secure location over a non-public Internet connection.

- Training employees on cybersecurity threats quarterly.

- Developing a solid business recovery plan for when an attack occurs.

“The challenge with cyber threats is daunting because they can enter the business environment from any number of areas, making comprehensive, multi-layer security strategies and implementations a must,” said Katie Horvath, CMO, Aunalytics. “However, by implementing this recommended regimen of protections, organizations can significantly reduce the risk of a successful attack, safeguarding client data and the organization’s long-term viability.”

Tweet this: .@Aunalytics cites cybersecurity best practices for financial services as attacks rise 118% in 2021 #Dataplatform #Dataanalytics #Dataintegration #Dataaccuracy #ArtificialIntelligence #AI #Masterdatamanagement #MDM #DataScientist #MachineLearning #ML #DigitalTransformation #FinancialServices

About Aunalytics

Aunalytics is a data platform company delivering answers for your business. Aunalytics provides Insights-as-a-Service to answer enterprise and mid-sized companies’ most important IT and business questions. The Aunalytics® cloud-native data platform is built for universal data access, advanced analytics and AI while unifying disparate data silos into a single golden record of accurate, actionable business information. Its Daybreak™ industry intelligent data mart combined with the power of the Aunalytics data platform provides industry-specific data models with built-in queries and AI to ensure access to timely, accurate data and answers to critical business and IT questions. Through its side-by-side digital transformation model, Aunalytics provides on-demand scalable access to technology, data science, and AI experts to seamlessly transform customers’ businesses. To learn more contact us at +1 855-799-DATA or visit Aunalytics at https://www.aunalytics.com or on Twitter and LinkedIn.

PR Contact:

Denise Nelson

The Ventana Group for Aunalytics

(925) 858-5198

dnelson@theventanagroup.com

What is the 1-10-100 rule of data quality?

The 1-10-100 Rule pertains to the cost of poor data quality. As digital transformation becomes more prevalent and necessary for businesses, data across a company is integral to operations, executive decision-making, strategy, execution, and outstanding customer service. Yet many enterprises are plagued by data riddled with errors: duplicate records containing different information for the same customer, different spellings of names, different addresses, more than one account for the same vendor (with inconsistent pricing), and inconsistent information about a customer’s lifetime value or purchasing history. Reports and dashboards often go untrusted because the data underlying them is not trusted. Business operations by their nature often include manual data entry, and errors are inherent.

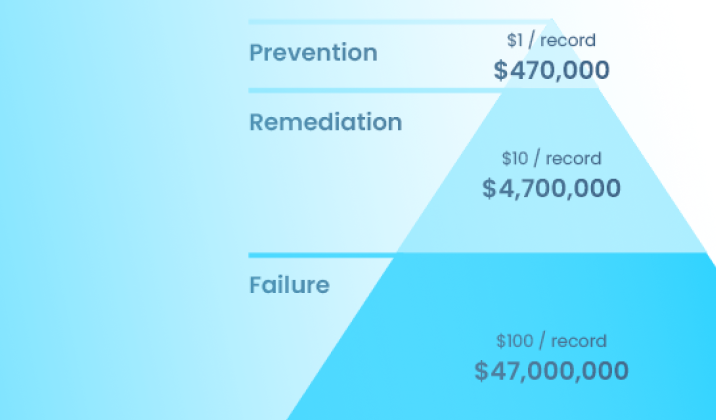

The true cost to an organization of trying to conduct operations and make decisions based upon data riddled with errors is tough to calculate. That’s why G. Labovitz and Y. Chang set out to conduct a study of enterprises to measure the cost of bad data quality. The 1-10-100 Rule was born from this research.

The Harvard Business Review reveals that on average, 47% of newly created records contain errors significant enough to impact operations.

If we combine the 1-10-100 Rule, using $100 per record for failing to fix data errors, with the Harvard Business Review statistic on the volume of such errors typical for an organization, the cost of poor data quality adds up rapidly. For an enterprise with 1,000,000 records, 470,000 contain errors, each costing the enterprise $100 per year in opportunity cost, operational cost, and so on, for a total of $47,000,000 per year. Had the enterprise cleansed the data at $10 per record, the clean-up effort would have cost $4,700,000, and had the records been verified upon entry at $1 per record, the cost would have been $470,000. Errors caused by manual data entry are inherent in business services. Even with humans eyeballing records as they are entered, errors escape. This is why investing in an automated data management platform with built-in data quality provides substantial cost savings to an organization. Our solution, Aunsight Golden Record, can help organizations mitigate these data issues by automating data integration and cleansing.
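
For readers who want to run the numbers for their own record counts, the arithmetic above can be sketched in a few lines of Python. This is only an illustration: the function name `data_quality_costs` and the constant names are assumptions made for this example and do not come from the original study or from any Aunalytics product. Like the paragraph above, it applies each per-record cost to the records that contain errors.

```python
# Per-record costs (USD) implied by the 1-10-100 Rule as used above.
# The names below are hypothetical, chosen only for this sketch.
COST_VERIFY_AT_ENTRY = 1    # catch the error when the record is entered
COST_CLEANSE_LATER = 10     # clean the record up after the fact
COST_OF_FAILURE = 100       # leave the error in place (per year)


def data_quality_costs(total_records: int, error_percent: int) -> dict:
    """Estimate the cost of each strategy for the records that contain errors.

    error_percent is a whole-number percentage (47 means 47%), which keeps
    the arithmetic in integers and avoids floating-point rounding.
    """
    records_with_errors = total_records * error_percent // 100
    return {
        "records_with_errors": records_with_errors,
        "verify_at_entry": records_with_errors * COST_VERIFY_AT_ENTRY,
        "cleanse_later": records_with_errors * COST_CLEANSE_LATER,
        "do_nothing": records_with_errors * COST_OF_FAILURE,
    }


if __name__ == "__main__":
    # The example from the text: 1,000,000 records with a 47% error rate.
    for strategy, value in data_quality_costs(1_000_000, 47).items():
        print(f"{strategy}: {value:,}")
```

Swapping in a different record count or error rate shows how quickly the gap between the three strategies widens as data volume grows.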