Aunalytics Wins a 2021 Digital Innovator Award from Intellyx

South Bend, IN (October 19, 2021) - Aunalytics, a leading data platform company delivering Insights-as-a-Service for enterprise businesses, announced today that it has received a 2021 Digital Innovator Award from Intellyx, an analyst firm dedicated to digital transformation.

As an industry analyst firm that focuses on enterprise digital transformation and the disruptive technology providers that drive it, Intellyx interacts with numerous innovators in the enterprise IT marketplace. Intellyx established the Digital Innovator Awards to honor technology providers that are chosen through the firm’s rigorous briefing selection process and deliver a successful briefing.

“At Intellyx, we get dozens of PR pitches each day from a wide range of vendors,” said Jason Bloomberg, President of Intellyx. “We will only set up briefings with the most disruptive and innovative firms in their space. That’s why it made sense for us to call out the companies that made the cut.”

Aunalytics offers a robust, cloud-native data platform built to enable universal data access, powerful analytics, and AI-driven answers. Customers can turn data into answers with the secure, reliable, and scalable data platform deployed and managed by technology and data experts as a service. The platform represents Aunalytics’ unique ability to unify all the elements necessary to process data and deliver AI end-to-end, from cloud infrastructure to data acquisition, organization, and machine learning models, all managed and run by Aunalytics as a secure managed service. While large enterprises are typically in a better position to afford advanced database and analytics technology, Aunalytics pairs its platform with access to its team’s expertise to help mid-market companies compete with them.

“We’re honored to have been selected by a respected analyst firm such as Intellyx to receive one of its 2021 Digital Innovator Awards,” said Rich Carlton, President of Aunalytics. “It is a testament to the value we bring to our customers who are challenged with the need to achieve critical insights to operate more efficiently and gain a competitive edge.”

For more details on the award and to see other winning vendors in this group, visit the 2021 Intellyx Digital Innovator Awards page.


About Aunalytics

Aunalytics is a data platform company delivering answers for your business. Named a Digital Innovator by analyst firm Intellyx, and selected for the prestigious Inc. 5000 list, Aunalytics provides Insights-as-a-Service to answer enterprise and midsize companies’ most important IT and business questions. The Aunalytics® cloud-native data platform is built for universal data access, advanced analytics and AI while unifying disparate data silos into a single golden record of accurate, actionable business information. Its Daybreak™ industry intelligent data mart combined with the power of the Aunalytics data platform provides industry-specific data models with built-in queries and AI to ensure access to timely, accurate data and answers to critical business and IT questions. Through its side-by-side digital transformation model, Aunalytics provides on-demand scalable access to technology, data science, and AI experts to seamlessly transform customers’ businesses. To learn more, contact us at +1 855-799-DATA or visit Aunalytics at https://www.aunalytics.com or on Twitter and LinkedIn.

PR Contact:

Denise Nelson

The Ventana Group for Aunalytics

(925) 858-5198

dnelson@theventanagroup.com

Aunalytics has been included in the 2021 BioCrossroads Book of Data and Organizations

Aunalytics has been included in the 2021 BioCrossroads Book of Data and Organizations, a directory of Indiana enterprises at the intersection of health and data. It includes information on Indiana’s industry, government, health systems, academia, and digital health startups that have tremendous data and technology resources driving transformative healthcare and life sciences work locally and globally. The directory now includes snapshots of 40 organizations that control data assets (data sets, data talent, and/or data technology), as well as five cross-organizational initiatives that are collaborating on a specific data project or program.

Aunalytics, Inc. Profile

Role(s)

- Aunalytics is a data platform company delivering answers for your business. Aunalytics provides Insights-as-a-Service to answer enterprise and midsized companies’ most important IT and business questions. The Aunalytics® cloud-native data platform is built for universal data access, advanced analytics and AI while unifying disparate data silos into a single golden record of accurate, actionable business information. Its Daybreak™ industry intelligent data mart combined with the power of the Aunalytics data platform provides industry-specific data models with built-in queries and AI to ensure access to timely, accurate data and answers to critical business and IT questions. Through its side-by-side digital transformation model, Aunalytics provides on-demand scalable access to technology, data science, and AI experts to seamlessly transform customers’ businesses.

- Our analytics focus on healthcare revenue cycle management provides AI- and machine learning-driven insights for reducing insurance reimbursement underpayments and streamlining billing, delivering a data-driven practice management solution.

Mission

We use data and technology to improve the lives of others.

History

Aunalytics began in 2011 when successful data center entrepreneurs in South Bend, Indiana, partnered with data scientists from the University of Notre Dame to provide end-to-end business and IT analytics solutions backed by a cloud data center. Aunalytics aims to bring data and analytics to mid-market businesses, healthcare systems, and providers through our side-by-side model, which gives clients access to our data engineering and data science experts so they can harness data-driven insights and better compete with large national organizations. We provide healthcare industry-specific data models and mine your data for insights to reduce operational costs and drive revenue.

About

- 250 team members in Indiana, Michigan and Ohio

- Enterprise cloud, data analytics, data management and managed services offerings powered by our data platform

- Industry-specific data analytics built for healthcare providers’ revenue cycle management

- HIPAA compliant enterprise cloud

Where Can I Find an End-to-End Data Analytics Solution?

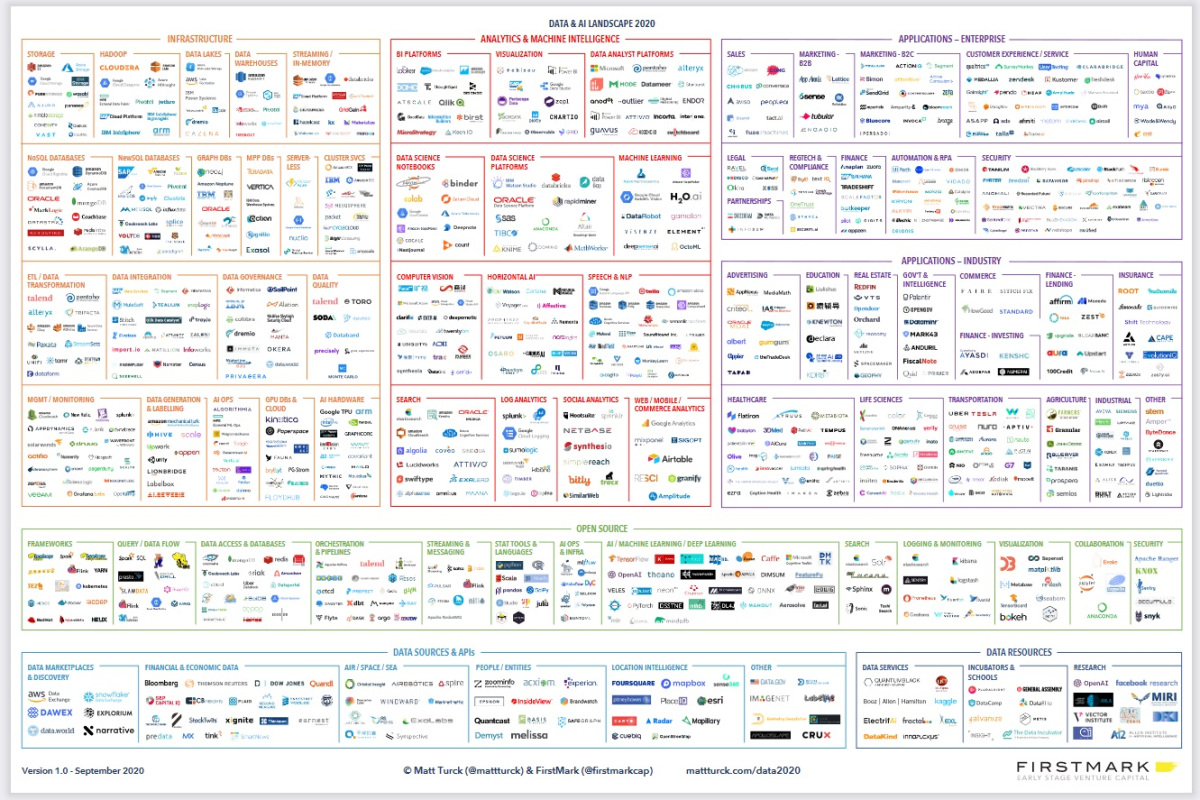

The data analytics landscape has exploded over the past decade with an ever-growing selection of products and services: literally thousands of tools exist to help businesses deploy and manage data lakes, ETL and ELT, machine learning, and business intelligence. With so many tools to choose from, how do business leaders find the right ones? How do you piece them together and use them to achieve business outcomes? The truth is that many tools are built for data scientists, data engineers, and other users with technical expertise. If you do not have a data science department, your company is at risk of buying technologies that your team does not have the expertise to use and maintain. This turns digital transformation into a cost center instead of sparking data-driven revenue growth.

Image credit: Firstmark

https://venturebeat.com/2020/10/21/the-2020-data-and-ai-landscape/

Aunalytics’ side-by-side service model provides value that goes beyond most other tools and platforms on the market by providing a data platform with built-in data management and analytics, as well as access to human intelligence in data engineering, machine learning, and business analytics. While many companies offer one or two similar products, and many consulting firms can provide guidance in choosing and implementing tools, Aunalytics integrates all the tools and expertise in one end-to-end solution built for non-technical business users. The success of a digital transformation project should not be measured by hitting implementation milestones; it should be measured in business outcomes.

Why it is Important to Have Real-time Data for Analytics

Analytics based upon stale data provides stale results. Fresh data powers up-to-date decision-making.

Real-time data ingestion, integration and cleansing to create a golden record of business information ready for analytics is critical to make better business decisions. This type of ingestion uses technology such as change data capture to bring across only new bits of data – changes to the existing data – as they are made in the business. Streaming allows for efficient processing (cleansing, matching, merging), rather than piling up changes all day and batching them overnight for processing. Streamed data provides changes in real-time so that business decisions are not made based upon yesterday’s data. Although some data sources and systems only support batch transfer, data ingestion technologies are ready for when the core systems modernize. Hopefully the days of stalled analytics waiting for data to arrive will soon be behind us.
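The difference between change data capture and an overnight batch can be sketched in a few lines of Python. This is a minimal illustration, not Aunalytics’ ingestion pipeline: it assumes a hypothetical change-event format (`ChangeEvent`, carrying an operation, a record key, and only the changed columns) and shows how applying each change as it arrives keeps a target table current without reloading it.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable, Optional


@dataclass
class ChangeEvent:
    """One captured change from a source system (hypothetical event format)."""
    op: str                                   # "insert", "update", or "delete"
    key: str                                  # primary key of the changed record
    data: Optional[Dict[str, Any]] = None     # only the columns that changed


def apply_changes(table: Dict[str, Dict[str, Any]],
                  events: Iterable[ChangeEvent]) -> Dict[str, Dict[str, Any]]:
    """Apply change events in arrival order, touching only the affected rows."""
    for ev in events:
        if ev.op == "delete":
            table.pop(ev.key, None)
        elif ev.op in ("insert", "update"):
            # Merge the changed columns into the existing row, creating it if needed.
            table.setdefault(ev.key, {}).update(ev.data or {})
        else:
            raise ValueError(f"unknown operation: {ev.op}")
    return table


# Usage: two changes arrive from the source system and are applied immediately.
customers = {"c1": {"name": "Ann Lee", "city": "South Bend"}}
apply_changes(customers, [
    ChangeEvent("update", "c1", {"city": "Mishawaka"}),
    ChangeEvent("insert", "c2", {"name": "Raj Patel", "city": "Elkhart"}),
])
print(customers)
```

Because each event carries only the changed columns, the work per update is proportional to the size of the change rather than the size of the table, which is what makes continuous processing practical compared with piling changes up for a nightly batch.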

Without real-time data management, the time gap causes lags in decision-making that can cost companies time, money, and energy. Real-time data management enables:

- Rapid results

- Faster scaling

- Better decision-making

- More efficient data delivery

- Monetizing windows of opportunity

- Timely actions in response to current insights

- Improved and automated business processes

- Proactive decision-making instead of reactive

- Immediate responses as events unfold

- More personalized customer experiences

Aunalytics Acquires Naveego to Expand Capabilities of its End-to-End Cloud-Native Data Platform to Enable True Digital Transformation for Customers

Naveego Data Accuracy Platform Provides Comprehensive Data Integration, Data Quality, Data Accuracy and Data Governance for Enterprises to Capitalize on Data Assets for Competitive Advantage

South Bend, IN (February 22, 2021) - Aunalytics, a leading data platform company delivering Insights-as-a-Service for enterprise businesses, today announced the acquisition of Naveego, an emerging leader in cloud-native data integration solutions. The acquisition combines the Naveego® Complete Data Accuracy Platform with Aunalytics’ Aunsight™ Data Platform to enable the development of powerful analytic databases and machine learning algorithms for customers.

Data continues to grow at an alarming rate and changes continuously, driven by a myriad of sources such as artificial intelligence (AI), machine learning (ML), the Internet of Things (IoT), mobile devices, and others outside of traditional data centers. Too often, organizations ignore the exorbitant costs and compliance risks associated with maintaining bad data. According to a Harvard study, 47 percent of newly created records have some sort of quality issue. Other reports indicate that up to 90 percent of a data analyst’s time is wasted on finding and wrangling data before it can be explored and used for analysis.

Aunalytics’ Aunsight Data Platform addresses this data accuracy dilemma with the addition of Naveego to its portfolio of analytics, AI, and ML capabilities. The Naveego data accuracy offering provides an end-to-end cloud-native platform that delivers seamless data integration, data quality, data accuracy, Golden-Record-as-a-Service™, and data governance, enabling customers across the financial services, healthcare, insurance, and manufacturing industries to make real-time business decisions.

Aunalytics will continue to innovate in advanced analytics, machine learning, and AI, including the company’s newest Daybreak™ offering for financial services. Unlike other “one-size-fits-all” technology solutions, Daybreak was designed exclusively for banks and credit unions, with financial industry intelligence and AI built into the platform. Daybreak seamlessly converts rich transactional data into actionable, intelligent insights for end users to answer customers’ most important business and IT questions.

“I’m extremely excited to be leading this next chapter of innovation and growth for Aunalytics and to provide our customers with a new era of advanced analytics software and technology service coupled with Naveego’s data accuracy platform,” said Tracy Graham, CEO, Aunalytics. “Now enterprises have the assurance of data they can trust along with actionable analytics to make the most accurate decisions for their businesses to increase customer satisfaction and shareholder value.”


About Aunalytics

Aunalytics is a data platform company delivering answers for your business. Aunalytics provides Insights-as-a-Service to answer enterprise and midsized companies’ most important IT and business questions. The Aunalytics® cloud-native data platform is built for universal data access, advanced analytics and AI while unifying disparate data silos into a single golden record of accurate, actionable business information. Its Daybreak™ industry intelligent data mart combined with the power of the Aunalytics data platform provides industry-specific data models with built-in queries and AI to ensure access to timely, accurate data and answers to critical business and IT questions. Through its side-by-side digital transformation model, Aunalytics provides on-demand scalable access to technology, data science, and AI experts to seamlessly transform customers’ businesses. To learn more, contact us at 1-855-799-DATA or visit Aunalytics at http://www.aunalytics.com or on Twitter and LinkedIn.

PR Contact:

Sabrina Sanchez

The Ventana Group for Aunalytics

(925) 785-3014

sabrina@theventanagroup.com

Aunalytics named one of the 2021 Best Tech Startups in South Bend, IN


Aunalytics has been named one of South Bend’s Best Tech Startups for 2021 by The Tech Tribune. We are honored to have received this award for the past four years. Selection is based on revenue potential, leadership, brand traction, and the competitive landscape.

About Aunalytics

Aunalytics delivers insights as a service to answer enterprise and midsized companies’ most important IT and business questions. The Aunalytics cloud-native data platform is built for universal data access, powerful analytics, and AI. With the data platform deployed and managed securely, Aunalytics reliably turns disparate data silos into a golden record of accurate business information and uses its powerful analytics to provide answers.

Its Daybreak industry intelligent data mart combines the power of the data platform with industry-specific data models to provide access to timely, accurate data, and answer critical business and IT questions with advanced built-in queries and AI.

Through its side-by-side digital transformation model, Aunalytics provides on-demand scalable access to technology, data science, and AI experts to help transform its partners’ businesses.

The complete list of honorees is featured on The Tech Tribune website.

Daybreak: A Foundation for Advanced Analytics, Machine Learning, and AI

Financial institutions have no shortage of data, and most know that advanced analytics, machine learning, and artificial intelligence (AI) are key technologies that must be utilized in order to stay relevant in the increasingly competitive banking landscape. Analytics is a key component of any digital transformation initiative, with the end goal of providing a superior customer experience. This digital transformation, however, is more than simply digitizing legacy systems and accommodating online/mobile banking. To effectively achieve digital transformation, you must be in a position to capitalize on one of your greatest competitive assets: your data.

However, getting to successful data analytics and insights comes with its own unique challenges and requirements. The first challenge is building the appropriate technical foundation. Actionable BI and advanced analytics require a modern, specialized data infrastructure capable of storing and processing large volumes of transactional data in fractions of a second. Furthermore, many financial institutions not only struggle with technical execution but also lack the personnel skill sets required to manage an end-to-end analytics pipeline, from infrastructure to automated insights delivery.

In this article, we examine some of the most impactful applications of advanced analytics, machine learning, and AI for banks and credit unions, and explain how Daybreak for Financial Services solves many of these challenges by providing the ideal foundation for all of your immediate and future analytics initiatives.

Machine Learning and Artificial Intelligence in the Financial Industry

Data analysis provides a wide range of applications that can ultimately increase revenue, decrease expenses, increase efficiency, and improve the customer experience. Here are just a few examples of how data can be utilized within the financial services industry:

- Inform decision-making through business intelligence and self-service analytics:

While banks and credit unions collect a wide variety of data, traditionally, it has not always been easy to access or query this data, which makes uncovering the desired answers and insights difficult and time-consuming. With the proper analytics foundation, employees across the organization can begin to answer questions that directly influence both day-to-day and long-term decision-making.

For example, a data-informed employee could determine where to open a new branch based on where most transactions currently take place, or by filtering customers by home address. They could also determine how to staff a branch appropriately by looking at the times of day that typically have the most customer activity, and at trends related to that activity type.
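As a rough illustration of the staffing question, the Python sketch below counts transactions per hour of day for one branch. The `(branch, timestamp)` record layout, the sample data, and the `busiest_hours` helper are hypothetical; a fuller analysis would also break the counts out by weekday and activity type.

```python
from collections import Counter
from datetime import datetime


def busiest_hours(transactions, branch, top_n=3):
    """Return the top_n (hour_of_day, transaction_count) pairs for one branch."""
    counts = Counter(ts.hour for b, ts in transactions if b == branch)
    return counts.most_common(top_n)


# Hypothetical sample of (branch, timestamp) transaction records.
txns = [
    ("Main St", datetime(2021, 3, 1, 9, 15)),
    ("Main St", datetime(2021, 3, 1, 12, 5)),
    ("Main St", datetime(2021, 3, 2, 12, 40)),
    ("Main St", datetime(2021, 3, 2, 17, 30)),
    ("Eastside", datetime(2021, 3, 1, 12, 10)),
]
print(busiest_hours(txns, "Main St", top_n=1))  # [(12, 2)]
```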

- Improve collection and recovery rates on loans:

By implementing pattern recognition, risk and collection departments can identify and efficiently target the most at-risk loans. Loan departments could also proactively reach out to holders of at-risk loans to discuss refinancing options that would improve the borrower’s ability to pay and decrease the risk of default.
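Pattern recognition for at-risk loans is usually done with a model trained on historical outcomes. The sketch below is a deliberately small, dependency-free logistic regression fit by gradient descent; it is not a description of any Aunalytics model. The two features (missed payments in the last six months, and days past due divided by 30) and the tiny training set are hypothetical placeholders, chosen only to show how a fitted model turns loan attributes into a score that a collections team could sort by.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(X, y, lr=0.1, epochs=2000):
    """Fit logistic-regression weights and bias with batch gradient descent."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # Error = predicted probability minus the observed label (0 or 1).
            err = sigmoid(b + sum(wj * xj for wj, xj in zip(w, xi))) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def default_probability(w, b, x):
    """Model's estimated probability that a loan with features x defaults."""
    return sigmoid(b + sum(wj * xj for wj, xj in zip(w, x)))


# Hypothetical history: [missed payments in last 6 months, days past due / 30]
X = [[0, 0], [0, 0], [1, 0], [0, 1], [2, 1], [3, 2], [4, 3], [3, 1]]
y = [0, 0, 0, 0, 1, 1, 1, 1]          # 1 = loan later defaulted
w, b = train_logistic(X, y)

# Score open loans and list the riskiest first for the collections team.
open_loans = {"L-101": [0, 0], "L-102": [3, 2], "L-103": [1, 1]}
ranking = sorted(open_loans,
                 key=lambda k: default_probability(w, b, open_loans[k]),
                 reverse=True)
print(ranking)
```

A real model would be trained on far more loan history and features; the sorted `ranking` at the end is the step that corresponds to targeting the most at-risk loans first.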

- Improve efficiency and effectiveness of marketing campaigns:

Banks and credit unions can create data-driven marketing programs that offer personalized services and next-best products and improve customer onboarding by knowing which customers to reach out to at the right time. Data-driven marketing allows financial institutions to be more efficient with their marketing dollars and to track campaign outcomes more effectively.

- Increase fraud detection abilities:

Unfortunately, fraud has become quite common in the financial services industry, and banks and credit unions are investing in new technologies to fight it. Artificial intelligence can be used to detect triggers that indicate fraud in transactional data. This gives institutions the ability to proactively alert customers of suspected fraudulent activities on their accounts to prevent further loss.
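One simple way to express a fraud “trigger” is to flag transactions whose amount sits far outside a customer’s own history. The Python sketch below uses a z-score against the customer’s past amounts. The three-standard-deviation threshold and the sample amounts are hypothetical, and real fraud systems combine many more signals (merchant, location, timing) than amount alone.

```python
from statistics import mean, stdev


def flag_unusual(history, new_amounts, threshold=3.0):
    """Flag amounts more than `threshold` standard deviations from the
    customer's historical mean (a z-score test)."""
    mu = mean(history)
    sigma = stdev(history)          # sample standard deviation; needs >= 2 values
    if sigma == 0:
        # Identical history: any different amount counts as unusual.
        return [amt != mu for amt in new_amounts]
    return [abs(amt - mu) / sigma > threshold for amt in new_amounts]


history = [42.10, 38.75, 55.00, 47.30, 40.00, 51.20]   # hypothetical past card amounts
print(flag_unusual(history, [45.00, 1250.00]))  # [False, True]
```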

These applications of machine learning and AI simply scratch the surface of what can be achieved with data, but they are not always easy to implement. Before a financial institution can embark on any advanced analytics project, it must first establish the appropriate foundational analytics infrastructure.

Daybreak is a Foundational Element for Analytics

There are many applications for analytics within the financial services industry, but the ability to utilize machine learning, AI, or even basic business intelligence is limited by data availability and infrastructure. One of the biggest challenges to achieving advanced analytics initiatives is collecting and aggregating data across multiple disparate sources, including core data. To make truly proactive decisions based on data, these sources need to be updated regularly, which is a challenge in itself.

Additionally, this data needs to be aggregated on an infrastructure built for analytics. For example, a banking core system is built to record large amounts of transactions and is designed to be a system of record. But it is not the optimal type of database structure for analytics.

To solve these challenges, Aunalytics has developed Daybreak, an industry-intelligent data mart built specifically for banks and credit unions to access and take action on the right data at the right time. Daybreak includes all the infrastructure components necessary for analytics, providing financial institutions with an up-to-date, aggregated view of their data that is ready for analysis. It offers users easy-to-use, intuitive analysis tools for people of all experience levels—industry-specific pre-built queries, the Data Explorer guided query tool, or the more advanced SQL Builder. Daybreak also provides easy access to up-to-date, accurate data for more advanced analytics through other modeling and data science tools.

Once this infrastructure is in place, providing the latest, analytics-ready data, the organization’s focus can shift to implementing a variety of analytics solutions, such as advanced KPIs, predictive analytics, targeted marketing, and fraud detection.

Daybreak Uses AI to Enhance Data for Analysis

In addition to providing the foundational infrastructure for analytics, Daybreak also utilizes AI to ensure the data itself is both accurate and ready for analysis. Banks and credit unions collect large amounts of data, both structured and unstructured. Unfortunately, unstructured data is difficult to integrate and analyze. Daybreak uses industry intelligence and AI to convert this unstructured data into a structured tabular format, familiar to analysts. To ensure accuracy, Daybreak utilizes AI to perform quality checks to detect anomalies as data is added or updated.
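The paragraph above describes AI-based quality checks. As a much simpler stand-in, the Python sketch below shows two rule-based checks that could run each time a batch of records is loaded: a volume check against recent load sizes and a completeness check on required fields. The `check_batch` function, its 50% tolerance, and the field names are hypothetical.

```python
from statistics import median


def check_batch(rows, required_fields, recent_counts, tolerance=0.5):
    """Return human-readable issues found in a newly loaded batch of records."""
    issues = []
    # Volume check: flag loads that differ from the recent median by > tolerance.
    typical = median(recent_counts)
    if typical and abs(len(rows) - typical) / typical > tolerance:
        issues.append(f"row count {len(rows)} deviates from typical {typical}")
    # Completeness check: every required field must be present and non-empty.
    for i, row in enumerate(rows):
        missing = [f for f in required_fields if row.get(f) in (None, "")]
        if missing:
            issues.append(f"row {i} missing {', '.join(missing)}")
    return issues


batch = [
    {"customer_number": "123", "account_number": "456"},
    {"customer_number": "124", "account_number": ""},
]
for issue in check_batch(batch, ["customer_number", "account_number"], [4, 5, 4, 5]):
    print(issue)
```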

This industry intelligence also allows Daybreak to create Smart Features from existing data points. Smart Features are completely new data points that are engineered to answer actionable questions relevant to the financial services industry.

Banks and credit unions are fortunate to have a vast amount of data at their disposal, but for many institutions, that data is not always easily accessible for impactful decision-making. That is why it is necessary to build out a strong data foundation in order to take advantage of both basic business intelligence and more advanced machine learning and AI initiatives. Daybreak by Aunalytics provides the ideal, industry intelligent foundation for financial institutions to jump-start their journeys toward digital transformation, with the tools they need in order to utilize data to grow their organizations.

Aunalytics’ Client Success Team Drives Measurable Business Value

Transitioning to a more data-driven organization can be a long, complicated journey. A complete digital transformation is more than simply adopting new technologies (though that is an important component). It requires change at all levels of the business in order to pivot to a more data-enabled, customer-focused mindset. Aunalytics Client Success is committed to helping organizations digitally transform by guiding and assisting them along every step of the journey and, ultimately, allowing them to thrive.

Below, the Client Success (CS) team has answered some of the most common questions about what they do and how they help organizations achieve measurable business outcomes.

What is Client Success?

Aunalytics CS partners with clients to become their trusted advisor by building a customized CS Partnership Plan that uses the client’s unique business needs as its core goals. The CS Partnership Plan creates an exceptional client experience by consistently applying a combination of our team and technology to deliver measurable value and business outcomes for our clients.

What are the main goals of the Aunalytics Client Success team?

The Client Success team has four main goals:

- Designing targeted client experiences (by industry, product, and digital transformation stage)

- Recommending targeted next steps by simplifying and synthesizing complex information

- Delivering proactive and strategic support from onboarding to solution launch, ongoing support, and consulting

- Collecting and responding to client feedback on ways our service delivery can evolve

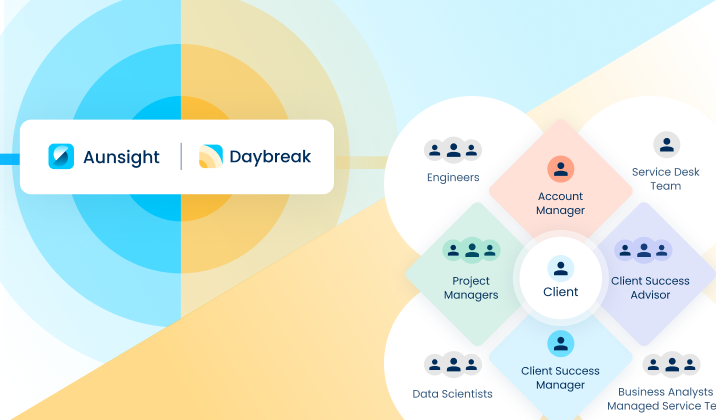

What are the various roles within the CS team?

There are two main roles within the CS team that interact with clients on a regular basis. The first is the Client Success Manager (CSM). The CSM manages day-to-day client tactical needs, providing updates and direction throughout the onboarding process. As the liaison between clients and the Aunalytics team, the CSM synthesizes complex information into clear actions, mitigates any roadblocks that may occur, and clearly communicates project milestones. The CSM works closely with clients throughout their partnership with Aunalytics, through onboarding, adoption, support, and engagement.

The Client Success Advisor (CSA) works on high-level strategy with each client, translating Aunalytics’ technology solutions into measurable business outcomes. They partner with the clients’ key stakeholders to understand their strategic objectives and create a custom technology roadmap that identifies the specific steps necessary to reach their digital transformation goals. These goals are integrated into the client’s specific CS Partnership Plan to ensure we are aligned on objectives and key results, with clear owners, timelines, and expected outcomes.

How often can a client expect to hear from a CS team member throughout their engagement with Aunalytics?

The CS team is introduced to clients at the initial kickoff meeting, and CSMs initiate weekly touch points to ensure onboarding milestones are being met and to communicate action items, responsible parties, and next steps. During these calls, the CS team (CS Manager, CS Advisor, Data Engineer, and Business Analyst) reviews the project tracker, highlighting recent accomplishments, key priorities, and next steps. Each item is documented and assigned an owner, a timeline, and clear completion criteria.

What is the Aunalytics “Side-by-Side Support” model and how does the CS team help facilitate this?

Our side-by-side service delivery model provides a dedicated account team, composed of technology experts (Data Engineers, Data Scientists, and Business Analysts) and data experts (Product Managers, Data Ingestion Engineers, and the Cloud Infrastructure Team), to help transform the way our clients work. The account team collaborates across the company, in service of the client, to ensure that everyone on the team is driving toward the client’s desired outcomes. The CSA captures this information in the CS Partnership Plan to ensure alignment, clear priorities, and ownership of time-bound tasks.

The CS team partners with Aunalytics’ Product, Ingestion, and Cloud teams to share client questions, recommendations, and ideas for future enhancements. The Partnership Plan is a custom document that evolves with the client’s changing needs. The CSA reviews the Partnership Plan with the client every quarter to capture new goals, document accomplishments, and create feasible timelines for implementation. The CSA’s goal is to build a relationship in which the client views the CSA as a key member of their internal team, on the same side of the table rather than a vendor.

A partnership with Aunalytics’ Client Success team is successful when the client realizes concrete business outcomes and value through the use of Aunalytics’ solutions (products + service).

What are some examples of business outcomes that CS has helped Daybreak for Financial Services clients achieve?

In addition to guidance throughout the initial implementation of Daybreak, CS has assisted banks and credit unions with the execution of a number of actionable business cases, such as:

- Assisting financial institutions with the implementation of self-service analytics programs;

- Improving collection and recovery rates on loans;

- Implementing pattern recognition to make sure that risk and collection departments are efficiently targeting the most at-risk loans;

- Creating data driven marketing programs to offer personalized services, next-best products, and onboarding. Data-driven marketing allows financial institutions to be more efficient with their marketing dollars and track campaign outcomes better;

- Integrating with third-party software systems.

The Aunalytics Client Success team is instrumental in helping clients realize measurable business value. Together with Aunalytics’ strong technology stack, this side-by-side delivery model ensures that all clients are equipped with the resources they need to effect positive change within their organizations and achieve their digital transformation goals.

Daybreak’s Built-in Industry Intelligence Leads to Faster, More Actionable Insights for Banks and Credit Unions

When choosing a piece of technology for your business, it is important to consider technical specs, features, and performance metrics. But that isn’t all that matters. Even though a product or solution may fit all of your technical requirements, it might not be a great fit for your bank or credit union. As an all-in-one data management and analytics platform, Daybreak is a uniquely strong contender on technical abilities alone, but it also offers features specifically engineered to answer the most prevalent and actionable questions that banks and credit unions currently ask, or should be asking themselves, every day. This is made possible through its built-in industry intelligence.

Industry Intelligence Increases Speed to Insights

Daybreak was developed specifically to help mid-market banks and credit unions compete by leveraging the same big data and analytics technologies and capabilities as the largest, leading institutions in the industry. We know that financial services organizations have a wealth of data, but not all of it is actionable, or even accessible in its current state. We quickly break down data silos and integrate all the relevant data points from multiple systems, including internal and external sources and structured and unstructured data.

Daybreak is industry intelligent because our experience tells us the kinds of questions an institution needs to answer. That is why over 40% of the data in our model doesn’t exist in the raw customer data. These new data points are called Smart Features.


Data Enriched with Smart Features Provides Actionable Insights

One huge advantage that Daybreak offers banks and credit unions is automated data enrichment, through the use of Smart Features. Smart Features are newly calculated data points that didn’t exist before. Daybreak utilizes AI to generate these new data points which allow you to answer more questions about your customers than ever before. For example, Daybreak automatically converts unstructured transactional data into structured Smart Features—converting a long, confusing text string to a transaction category. A transaction that looks like this in the raw data…

`CHASE CREDIT CRD CHECK PYMT SERIAL NUMBER XXXXXXXX`

is converted into the following structured data points, which can easily be used to filter and analyze transactions:

| Customer Number | Account Number | Destination Category | Destination Name | Recurring Payment |
|---|---|---|---|---|
| 123 | 456 | Credit Card | Chase | Yes |
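Daybreak uses AI for this conversion. The Python sketch below swaps in a much simpler technique, keyword rules written as regular expressions, purely to show the shape of the transformation from a raw description to structured columns like those in the table above. The rule list, the category names, and the three-month threshold used for the recurring-payment flag are hypothetical.

```python
import re

# Hypothetical keyword rules: (pattern, destination category, destination name).
RULES = [
    (re.compile(r"\bCHASE\b.*\bCREDIT\s+CRD\b"), "Credit Card", "Chase"),
    (re.compile(r"\bPAYROLL\b|\bDIR\s+DEP\b"), "Income", None),
]


def categorize(description):
    """Map a raw transaction description to (category, name), or None if no rule matches."""
    text = description.upper()
    for pattern, category, name in RULES:
        if pattern.search(text):
            return category, name
    return None


def is_recurring(payment_months, min_months=3):
    """Treat a payee as recurring if it was paid in at least min_months distinct months."""
    return len(set(payment_months)) >= min_months


print(categorize("CHASE CREDIT CRD CHECK PYMT SERIAL NUMBER XXXXXXXX"))
# ('Credit Card', 'Chase')
print(is_recurring(["2021-01", "2021-02", "2021-03"]))  # True
```

Hand-written rules like these break as soon as a new description format appears, which is the gap a learned classifier is meant to close.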

Another category of Smart Features consists of new values calculated from existing data. For example, Daybreak’s AI scans transactional data and determines which branch each individual uses most often. It can also look at a person’s home address and determine which branch is closest to their home. The AI also scans transactional data for anomalies and flags any unusual activity, which may indicate a fraud attempt or a life change. For example, if an account suddenly stops showing direct deposits, perhaps that customer has changed jobs or is in the process of switching to a different bank.
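The calculated Smart Features described above can be approximated with plain calculations. The Python sketch below is illustrative only: the function names, the sample data, the branch coordinates, and the 35-day gap used to decide that direct deposits have stopped are all hypothetical assumptions, not Daybreak’s actual logic. Closest branch is measured with the haversine great-circle distance.

```python
import math
from collections import Counter
from datetime import date


def most_used_branch(branch_ids):
    """Branch that appears most often in a customer's transactions."""
    return Counter(branch_ids).most_common(1)[0][0]


def haversine_km(a, b):
    """Great-circle distance in km between two (latitude, longitude) points."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def closest_branch(home, branches):
    """Name of the branch nearest to the customer's home coordinates."""
    return min(branches, key=lambda name: haversine_km(home, branches[name]))


def direct_deposit_stopped(deposit_dates, today, max_gap_days=35):
    """True if the customer had direct deposits but none in the last max_gap_days."""
    return bool(deposit_dates) and (today - max(deposit_dates)).days > max_gap_days


print(most_used_branch(["B2", "B1", "B2", "B2", "B3"]))            # B2
branches = {"Branch A": (41.676, -86.252), "Branch B": (41.662, -86.158)}
print(closest_branch((41.670, -86.170), branches))                  # Branch B
deposits = [date(2021, 1, 15), date(2021, 2, 15), date(2021, 3, 15)]
print(direct_deposit_stopped(deposits, today=date(2021, 5, 10)))    # True
```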

Since these Smart Features are automatically created, you can start asking actionable questions right away, without waiting for complicated analysis to be performed.

Daybreak has pre-built connectors to most of the major core systems, CRMs, loan and mortgage systems, and other heavily utilized financial industry applications. This means we can get access to your data faster, including granular daily transactional data, and automatically serve it back in a format that you can use to make data-driven decisions. We’ve figured out the difficult foundational part so you don’t have to spend months of development time building a data warehouse from scratch—you will begin getting insights right away.

Daybreak aggregates data from multiple sources, and allows you to receive actionable insights right away.

Scale your Team’s Industry Experience with Daybreak

One challenge that organizations face with any new initiative is how to transfer knowledge and collaborate across departments or locations. If one team member creates a useful analysis or process, it can be difficult to share with others who may want to look at the same type of information. Daybreak’s Query Wizard makes it easy to share intelligence across your business by providing pre-built queries for common banking questions and insights. We also update the pre-built queries regularly with new business-impacting questions as they are formulated. Lastly, with the click of a button, any query is available as SQL code, giving your IT team a huge head start on more advanced work they would like to do.

The Daybreak Advantage

Unlike other “one-size-fits-all” technology solutions, Daybreak has financial industry intelligence and AI built into the platform itself. It allows banks and credit unions easy access to relevant data quickly, and without investing excessive time and money to make it happen. With Daybreak, business users have access to actionable data, enriched with Smart Features, in order to start answering impactful questions they’ve never been able to before. Your entire organization can utilize industry-specific insights and collaborate on data analysis.

Daybreak is a game-changer for banks and credit unions. Start making better business decisions by effectively leveraging your data today.