Technology First, Taste of IT Conference

2021 Technology First, Taste of IT Conference

Sinclair Conference Center, Dayton, OH

Aunalytics to Attend 2021 Technology First, Taste of IT Conference

Aunalytics is excited to meet over 400 IT professionals at the Technology First Taste of IT Conference in Dayton, OH, and to share information on our Secure Managed Services and Aunsight™ Golden Record offerings with attendees. The new Secure Managed Services stack combines mission-critical IT services and leverages zero-trust, end-to-end security to ensure people and data are protected regardless of location, while Aunsight Golden Record turns siloed data from disparate systems into a single source of truth across your enterprise.

2021 Traverse Connect Annual Economic Summit

Grand Traverse Resort & Spa, Traverse City, MI

Aunalytics to Attend 2021 Traverse Connect Annual Economic Summit

Katie Horvath, Chief Marketing Officer at Aunalytics, will participate in a panel discussion during the Economic Outlook Luncheon at the Traverse Connect Annual Economic Summit. She will provide insights into the technology field, both nationally and in the Greater Traverse Region specifically. Aunalytics is pleased to be the Quick Connect Beverage Sponsor and to share information on our Secure Managed Services and Aunsight™ Golden Record offerings with attendees.

Aunalytics Wins a 2021 Digital Innovator Award from Intellyx

South Bend, IN (October 19, 2021) - Aunalytics, a leading data platform company delivering Insights-as-a-Service for enterprise businesses, announced today that it has received a 2021 Digital Innovator Award from Intellyx, an analyst firm dedicated to digital transformation.

As an industry analyst firm that focuses on enterprise digital transformation and the disruptive technology providers that drive it, Intellyx interacts with numerous innovators in the enterprise IT marketplace. Intellyx established the Digital Innovator Awards to honor technology providers that are chosen through the firm’s rigorous briefing selection process and deliver a successful briefing.

“At Intellyx, we get dozens of PR pitches each day from a wide range of vendors,” said Jason Bloomberg, President of Intellyx. “We will only set up briefings with the most disruptive and innovative firms in their space. That’s why it made sense for us to call out the companies that made the cut.”

Aunalytics offers a robust, cloud-native data platform built to enable universal data access, powerful analytics, and AI-driven answers. Customers can turn data into answers with the secure, reliable, and scalable data platform, which is deployed and managed as a service by technology and data experts. The platform represents Aunalytics’ unique ability to unify all the elements necessary to process data and deliver AI end-to-end, from cloud infrastructure to data acquisition, organization, and machine learning models – all managed and run by Aunalytics as a secure managed service. While large enterprises are typically in a better position to afford advanced database and analytics technology, Aunalytics pairs its platform with access to its team’s expertise to help mid-market companies compete with enterprise companies.

“We’re honored to have been selected by a respected analyst firm such as Intellyx to receive a 2021 Digital Innovator Award,” said Rich Carlton, President of Aunalytics. “It is a testament to the value we bring to our customers who are challenged with the need to achieve critical insights to operate more efficiently and gain a competitive edge.”

For more details on the award and to see other winning vendors in this group, visit the 2021 Intellyx Digital Innovator Awards page.

About Aunalytics

Aunalytics is a data platform company delivering answers for your business. Named a Digital Innovator by analyst firm Intellyx, and selected for the prestigious Inc. 5000 list, Aunalytics provides Insights-as-a-Service to answer enterprise and midsize companies’ most important IT and business questions. The Aunalytics® cloud-native data platform is built for universal data access, advanced analytics, and AI while unifying disparate data silos into a single golden record of accurate, actionable business information. Its Daybreak™ industry-intelligent data mart, combined with the power of the Aunalytics data platform, provides industry-specific data models with built-in queries and AI to ensure access to timely, accurate data and answers to critical business and IT questions. Through its side-by-side digital transformation model, Aunalytics provides on-demand, scalable access to technology, data science, and AI experts to seamlessly transform customers’ businesses. To learn more, contact us at +1 855-799-DATA or visit Aunalytics at https://www.aunalytics.com or on Twitter and LinkedIn.

PR Contact:

Denise Nelson

The Ventana Group for Aunalytics

(925) 858-5198

dnelson@theventanagroup.com

Data Scientists: Take Data Wrangling and Prep Out of Your Job Description

It is a well-known industry problem that data scientists typically spend at least 80% of their time finding and prepping data instead of analyzing it. The IBM study that originally published this statistic predates most organizations’ adoption of separate best-of-breed applications in functional business units. Typically, no single central data source is used by the entire company; instead, multiple data silos exist throughout an organization because of decentralized purchasing and adoption of applications best suited to a particular use or business function. As a result, data scientists must now cobble together data from multiple sources, often with separate “owners,” negotiate with IT and the various data owners to extract the data and deliver it to their analytics tools, and then make it usable. Having data analysts and data scientists build data pipelines is not only a complex technical problem but also a complex political one.

To visualize analytics results, a data analyst is not expected to build a new dashboard application. Instead, Tableau, Power BI, and other out-of-the-box solutions are used. So why require a data analyst to build data pipelines when an out-of-the-box solution can build, integrate, and wrangle pipelines of data in a matter of minutes? It saves time, money, and angst to:

- Automate data pipeline building and wrangling for analytics-ready data to be delivered to the analysts;

- Automatically create a real-time stream of integrated, munged, and cleansed data, and feed it into analytics in order to have current and trusted data available for immediate decision-making;

- Have automated data profiling to give analysts insights about metadata, outliers, and ranges and automatically detect data quality issues;

- Have built-in data governance and lineage so that a single piece of data can be tracked to its source; and

- Automatically detect when changes are made to source data and update the analytics without blowing up algorithms.
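Two of the items above, automated data profiling and detection of source changes, can be sketched in a few lines. The following is a minimal illustration in Python, not the Aunalytics implementation: the function names `profile_column` and `schema_changes`, the 1.5 × IQR outlier rule, and the sample data are all assumptions chosen for the example.

```python
import statistics

def profile_column(values):
    """Summarize one numeric column: missing count, range, and IQR outliers."""
    present = [v for v in values if v is not None]
    missing = len(values) - len(present)
    if len(present) < 4:
        # Too few points for meaningful quartiles; report only the basics.
        return {"missing": missing, "min": min(present, default=None),
                "max": max(present, default=None), "outliers": []}
    q1, _, q3 = statistics.quantiles(present, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr  # assumed outlier rule
    outliers = [v for v in present if v < low or v > high]
    return {"missing": missing, "min": min(present),
            "max": max(present), "outliers": outliers}

def schema_changes(previous, current):
    """Compare two {column: type_name} mappings taken from successive loads."""
    shared = set(previous) & set(current)
    return {
        "added": sorted(set(current) - set(previous)),
        "removed": sorted(set(previous) - set(current)),
        "retyped": sorted(c for c in shared if previous[c] != current[c]),
    }

# Hypothetical sample data for illustration only.
order_totals = [10, 12, 11, 13, 12, None, 11, 500]
print(profile_column(order_totals))
print(schema_changes({"id": "int", "zip": "int"},
                     {"id": "int", "zip": "str", "email": "str"}))
```

A pipeline could run checks like these on every load and alert an analyst, or pause downstream models, when an outlier or a changed column type appears, rather than letting the change silently break an algorithm.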

Building data pipelines is not the best use of a data scientist’s time. Data scientists instead need to spend their highly compensated time developing models, examining statistical results, comparing models, checking interpretations, and iterating on the models. This is particularly important given the shortage of these highly skilled workers in both North America and Europe, and because adding more FTEs to the cost of analytics projects makes them harder for management to justify.

According to David Cieslak, Ph.D., Chief Data Scientist at Aunalytics, without investment in automation and data democratization, the rate at which one can execute on data analytics use cases, and realize the business value, is directly proportional to the number of data engineers, data scientists, and data analysts hired. Using a data platform with built-in data integration and cleansing to automatically create analytics-ready pipelines of business information allows data scientists to concentrate on creating analytical results. This enables companies to rapidly build insights based upon trusted data and deliver those insights to clients in a fraction of the time that it would take if they had to manually wrangle the data.

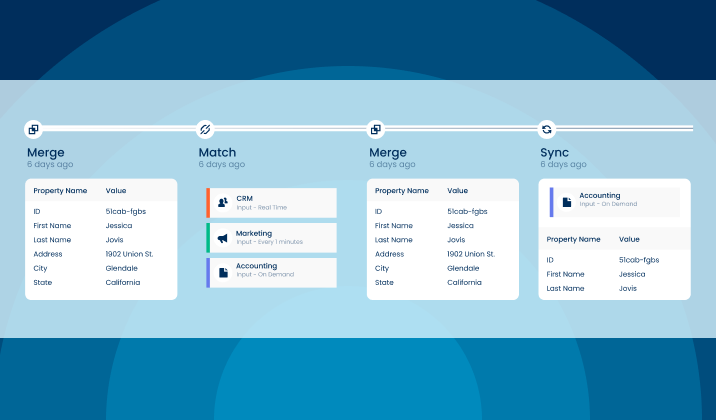

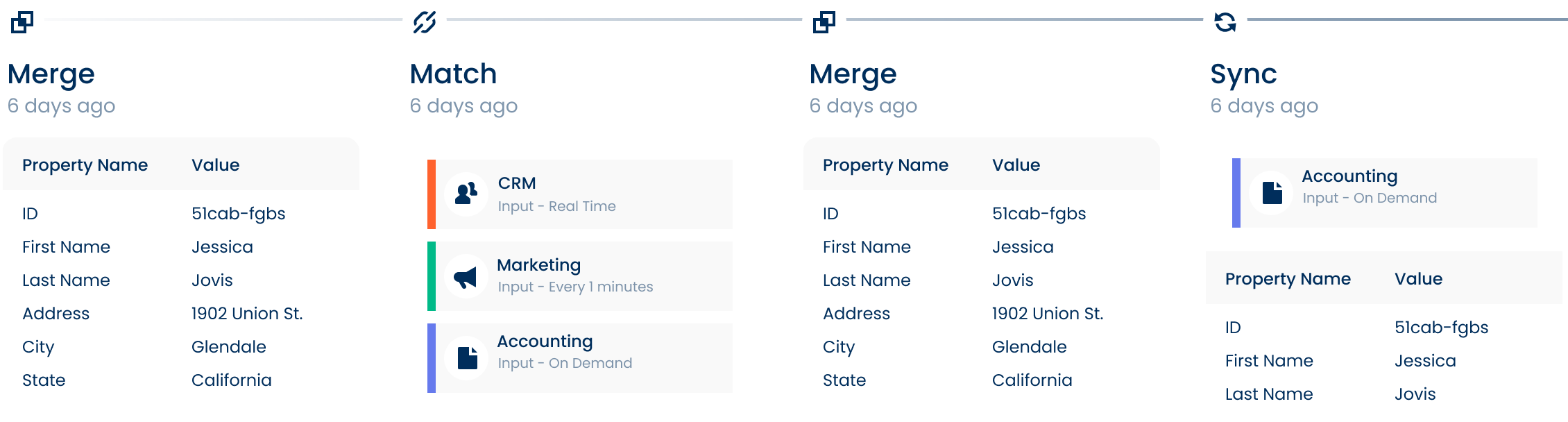

Our Solution: Aunsight Golden Record

Aunsight™ Golden Record turns siloed data from disparate systems into a single source of truth across your enterprise. Our cloud-native platform cleanses data to reduce errors, and Golden Record as a Service matches and merges data into a single source of accurate business information, giving you access to consistent, trusted data across your organization in real time. With this self-service offering, you can unify all your data to ensure enterprise-wide consistency and better decision making.
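To make the idea of matching and merging concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how Aunsight Golden Record works internally: it assumes records from different systems can be matched on a normalized email address, that each record carries an ISO-formatted `updated` date, and that the most recent non-empty value should win. The function names and sample records are hypothetical. Production matching typically also relies on fuzzy or probabilistic comparison across several fields.

```python
from collections import defaultdict

def normalize_email(email):
    """Normalize the match key so formatting differences do not split a group."""
    return email.strip().lower()

def build_golden_records(records):
    """Match records on normalized email, then merge each group into one record."""
    groups = defaultdict(list)
    for rec in records:
        groups[normalize_email(rec["email"])].append(rec)

    golden = {}
    for key, group in groups.items():
        merged = {}
        # Apply oldest first so newer non-empty values overwrite older ones.
        for rec in sorted(group, key=lambda r: r["updated"]):
            for field, value in rec.items():
                if field != "source" and value not in (None, ""):
                    merged[field] = value
        merged["email"] = key
        # Keep simple lineage: which systems contributed to this record.
        merged["sources"] = sorted({rec["source"] for rec in group})
        golden[key] = merged
    return golden

# Hypothetical records from two systems, for illustration only.
records = [
    {"source": "crm", "email": "Ann@Example.com ", "name": "Ann Lee",
     "phone": "", "updated": "2021-03-01"},
    {"source": "billing", "email": "ann@example.com", "name": "A. Lee",
     "phone": "555-0100", "updated": "2021-06-15"},
    {"source": "crm", "email": "bob@example.com", "name": "Bob Ray",
     "phone": None, "updated": "2021-01-10"},
]
for record in build_golden_records(records).values():
    print(record)
```

The two entries for Ann collapse into one record that takes the phone number from billing and records both contributing systems, which is the kind of lineage that lets a single value be traced back to its source.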

Meeting the Challenges of Digital Transformation and Data Governance

To meet these challenges, companies will be tempted to turn to traditional Data Quality (DQ) and Master Data Management (MDM) solutions for help. However, it is now clear that traditional solutions have not made good on the promise of helping organizations achieve their data quality goals. In 2017, the Harvard Business Review reported that only 3 percent of companies’ data currently meets basic data quality standards even though traditional solutions have been on the market for well over a decade. [1]

The failure of traditional solutions to help organizations meet these challenges is due to at least two factors. First, traditional solutions typically require exorbitant quantities of time, money, and human resources to implement. Traditional installations can take months or years, and often require prolonged interaction with the IT department. Extensive infrastructure changes need to be made and substantial amounts of custom code need to be written just to get the system up and running. As a result, only a small subset of the company’s systems may be selected for participation in the data quality efforts, making it nearly impossible to demonstrate progress against data quality goals.

Second, traditional solutions struggle to interact with big data, which is an exponentially growing source of low-quality data within modern organizations. This is because traditional systems typically require source data to be organized into relational schemas and to be formatted under traditional data types, whereas most big data is either semi-structured or unstructured in format. Furthermore, these solutions can only connect to data at rest, which ensures that they cannot interact with data streaming directly out of IoT devices, edge services or click logs.

Yet, demand for data quality grows. Gartner predicts that by 2023, intercloud and hybrid environments will realign from primarily managing data stores to integration.

Therefore, a new generation of cloud-native Data Accuracy solutions is needed to meet the challenges of digital transformation and modern data governance. These solutions must be capable of ingesting massive quantities of real-time, semi-structured or unstructured data, and be capable of processing that data both in-place and in-motion. [2] These solutions must also be easy for companies to install, configure, and use, so that ROI can be demonstrated quickly. As such, the Data Accuracy market will be won by vendors who can empower business users with point-and-click installations, best-in-class usability, and exceptional scalability, while also enabling companies to capitalize on emerging trends in big data, IoT, and machine learning.
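As a rough illustration of what processing semi-structured data in motion can look like, the Python sketch below reads JSON events one at a time, flattens their nested fields, and passes along only the records that contain the required values. It is a simplified example, not a description of any vendor's product: the function names, the dotted-key convention, and the required fields `device.id` and `reading` are assumptions.

```python
import json

def flatten(obj, prefix=""):
    """Flatten nested dicts into a single-level dict with dotted keys."""
    flat = {}
    for key, value in obj.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{name}."))
        else:
            flat[name] = value
    return flat

def clean_stream(lines, required=("device.id", "reading")):
    """Yield flattened records one at a time; skip malformed or incomplete ones."""
    for line in lines:
        try:
            record = flatten(json.loads(line))
        except (json.JSONDecodeError, AttributeError):
            # Not valid JSON, or valid JSON that is not an object.
            continue
        if all(record.get(field) is not None for field in required):
            yield record

# Hypothetical device events, for illustration only.
events = [
    '{"device": {"id": "a1"}, "reading": 21.5}',
    'not json',
    '{"device": {"id": "a2"}}',
]
for row in clean_stream(events):
    print(row)
```

Because `clean_stream` is a generator, each record is checked as it arrives instead of after a full batch has been loaded into a relational table, which is the distinction between data in motion and data at rest drawn above.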

1. Tadhg Nagle, Thomas C. Redman, David Sammon (2017). Only 3% of Companies’ Data Meets Basic Data Quality Standards. Retrieved from https://hbr.org/2017/09/only-3-of-companiesdata-meets-basic-quality-standards

2. Michael Ger, Richard Dobson (2018). Digital Transformation and the New Data Quality Imperative. Retrieved from https://2xbbhjxc6wk3v21p62t8n4d4-wpengine.netdna-ssl.com/wpcontent/uploads/2018/08/Digital-Transformation.pdf

Webinar: Do You Trust Your Data?

Do You Trust Your Data?

Presented by: Katie Horvath, CMO, Aunalytics

According to leading industry experts, 70% of digital transformation projects fail. Yet companies that succeed with data-driven initiatives are realizing a 20-30% increase in customer satisfaction along with profit margins between 20% and 50%. So, what’s the secret to success?

In this session we will discover the keys to successful digital transformation and how to harness the power of your data to increase customer satisfaction and shareholder value.

Please fill out the form below to watch the webinar recording.

All attendees will be entered to win a YETI® SOFT COOLER

Date: October 7th at 11:00 am EDT

Platform: Zoom