Workflow Builder - Exercise 1

Exercise Overview

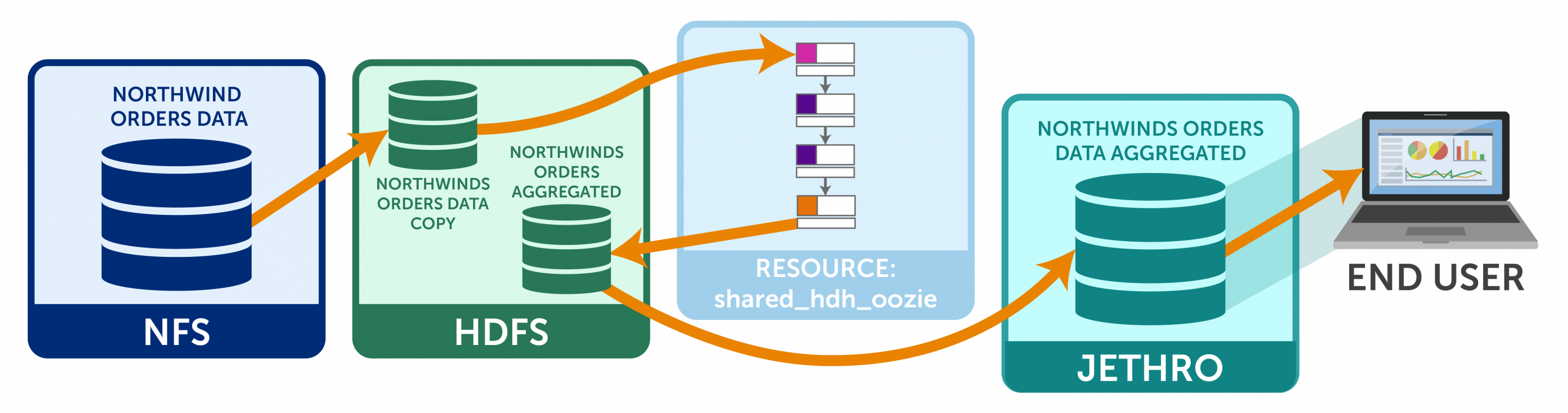

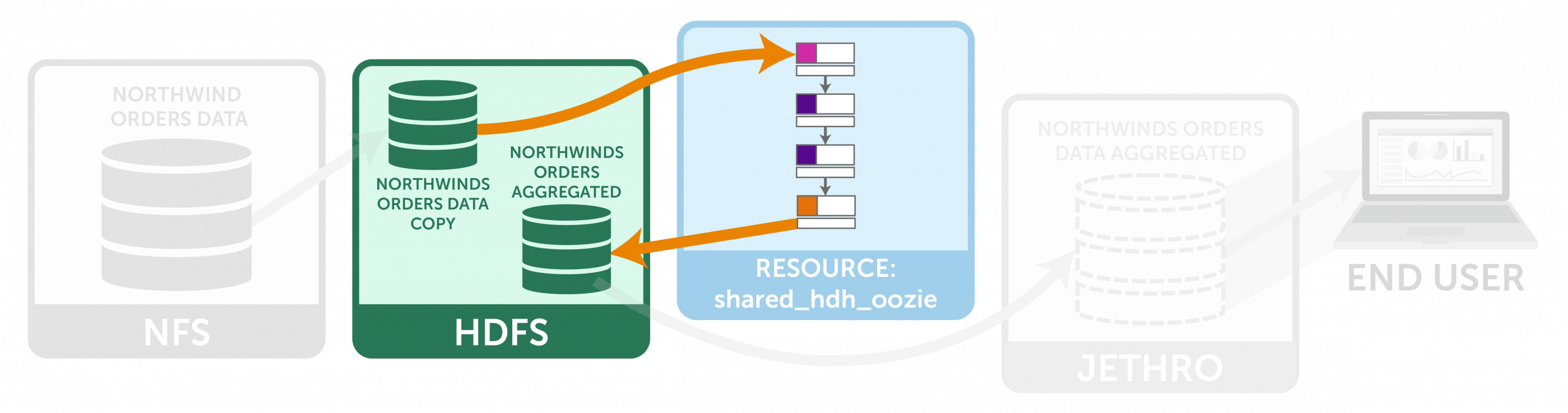

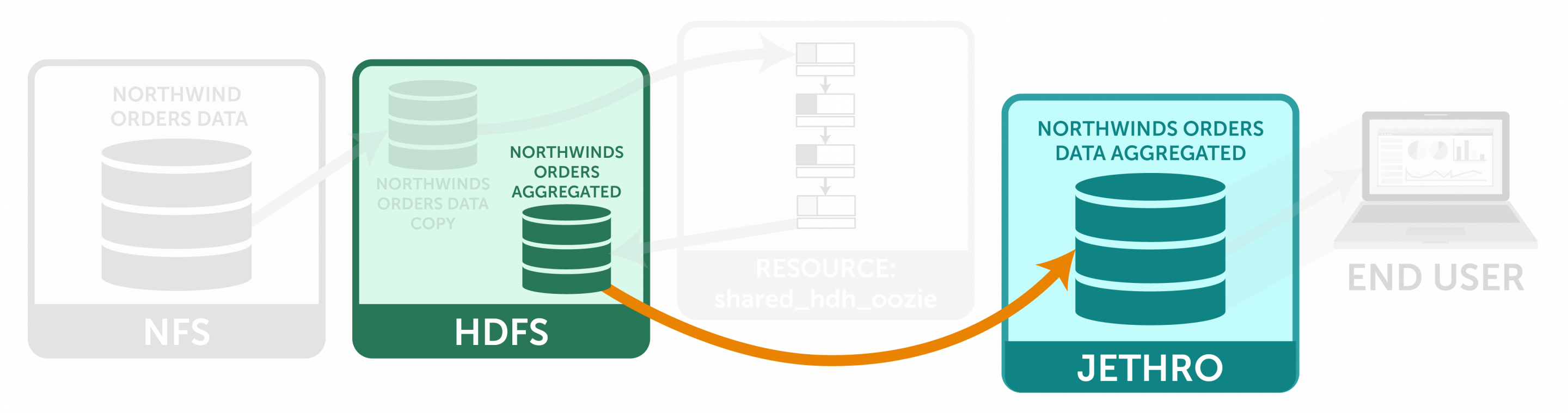

In this tutorial, you will learn how to use the Workflow Builder to orchestrate multiple operations: creating a workflow, moving data between sources, adding a dataflow to prepare and clean a dataset, and publishing the result to an accelerated data layer for consumption by business intelligence tools or further analysis.

Exercise #1

Objectives:

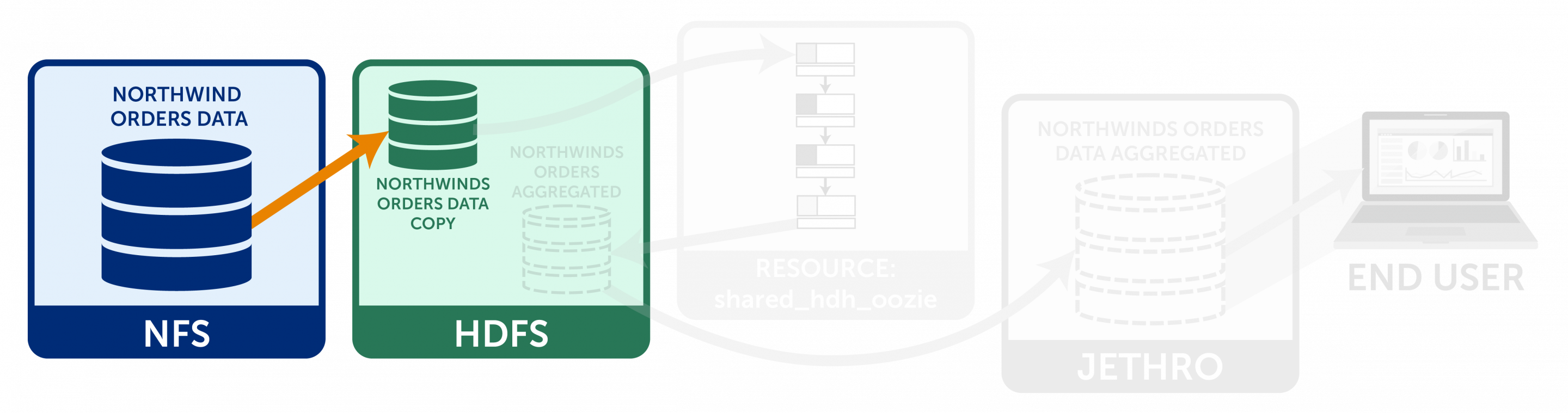

- Copy a dataset from NFS to HDFS

- Run a dataflow on the dataset

- Copy the output dataset to Jethro

A) Create a new Workflow

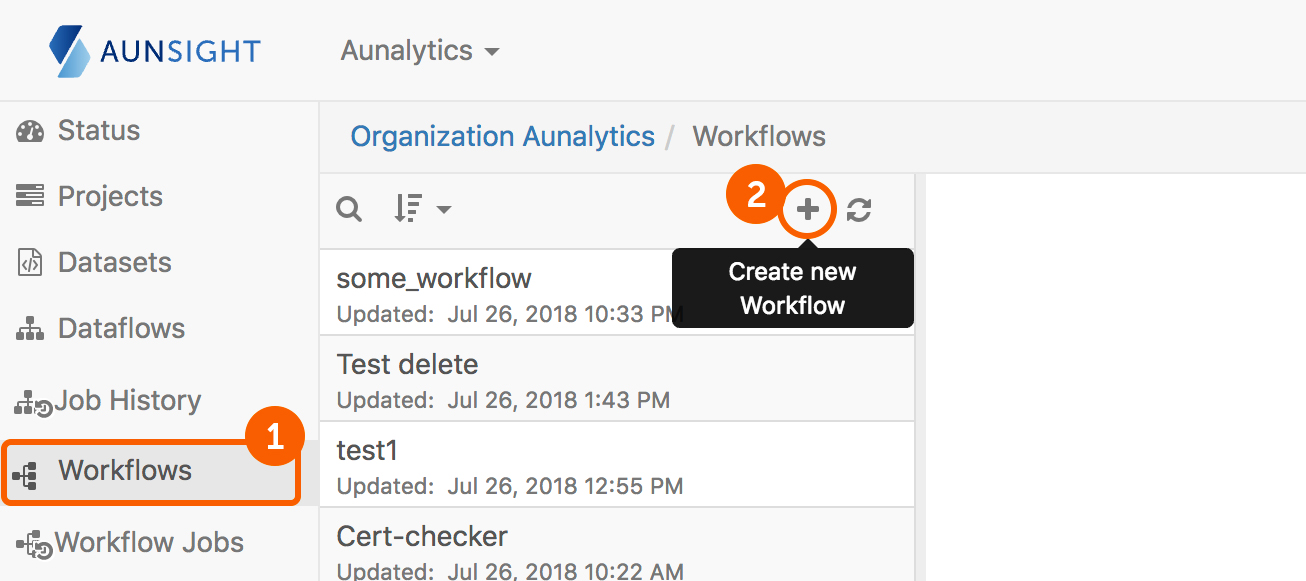

- After logging in to Aunsight and selecting the relevant organization, click Workflows in the menu bar on the left side of the screen.

- Click the plus button to create a new workflow.

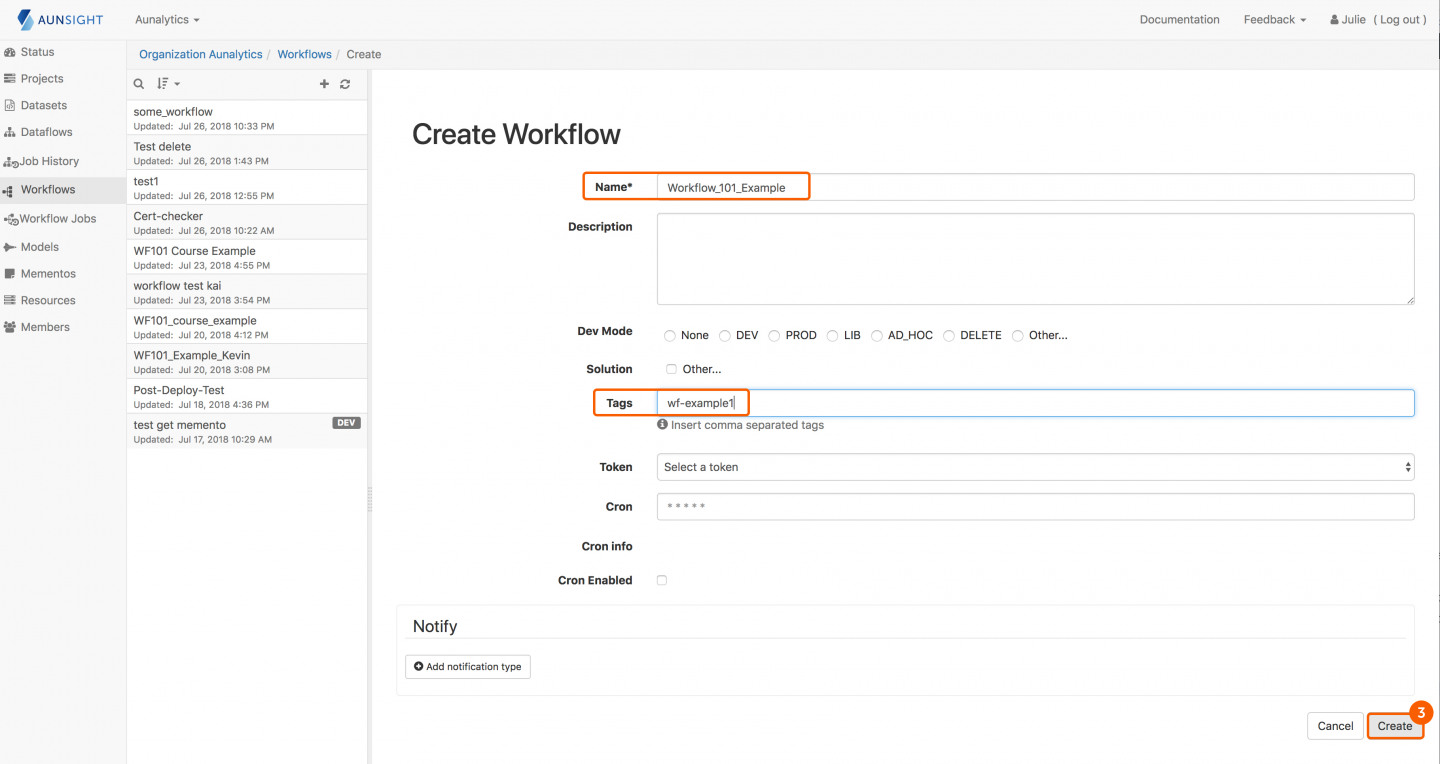

- Enter a descriptive name for your workflow, such as Workflow101 Example. In the tags, enter wf-example1. When finished, click Create.

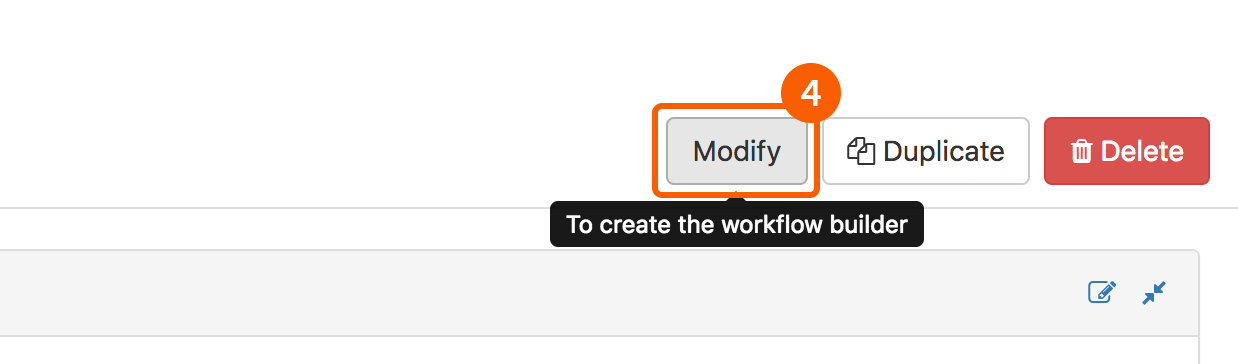

- You should now be on the Workflow page for the workflow you just created. Click the Modify button, which opens the Workflow Builder main screen.

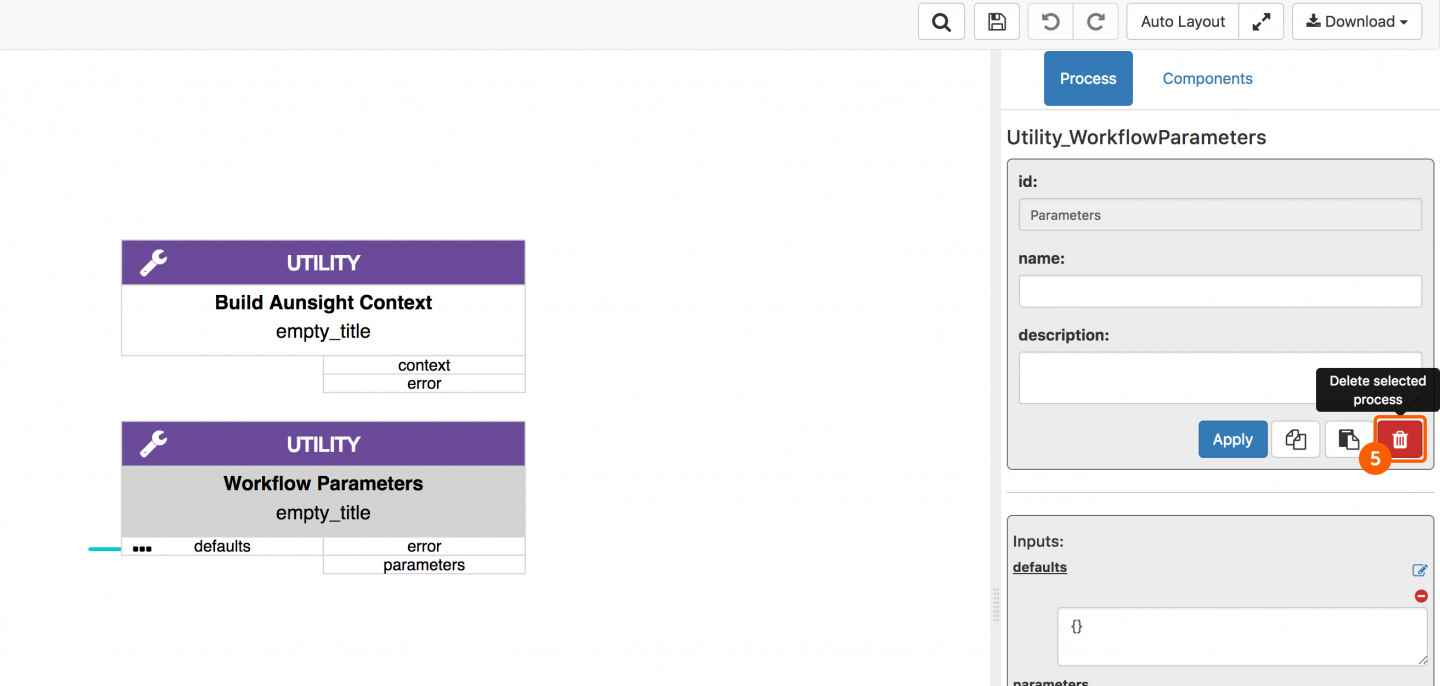

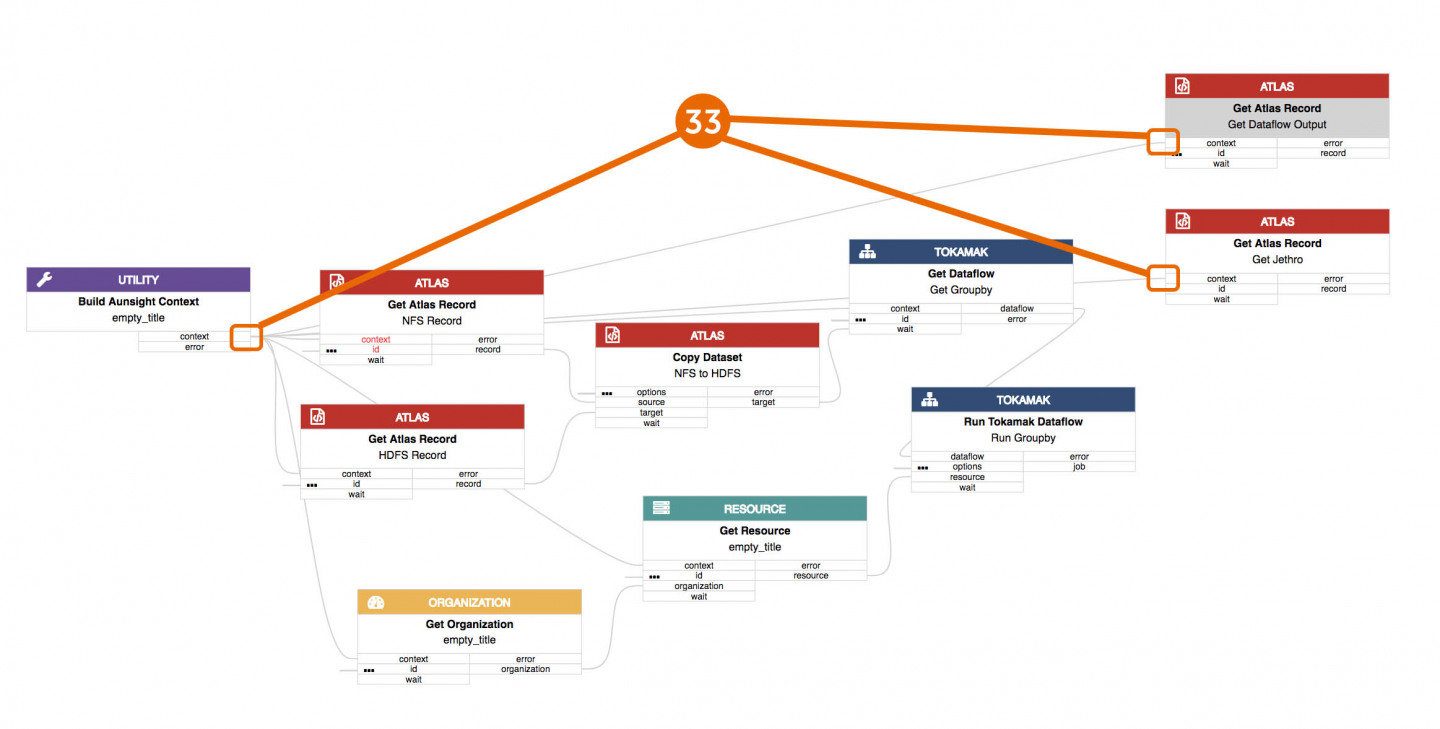

- You will see two components: Build Aunsight Context and Workflow Parameters. For simplicity, you may delete the Workflow Parameters component by clicking it to highlight it and then clicking the red trashcan button in the right side panel.

B) Move data from the source file system (NFS) into the target file system for processing (HDFS)

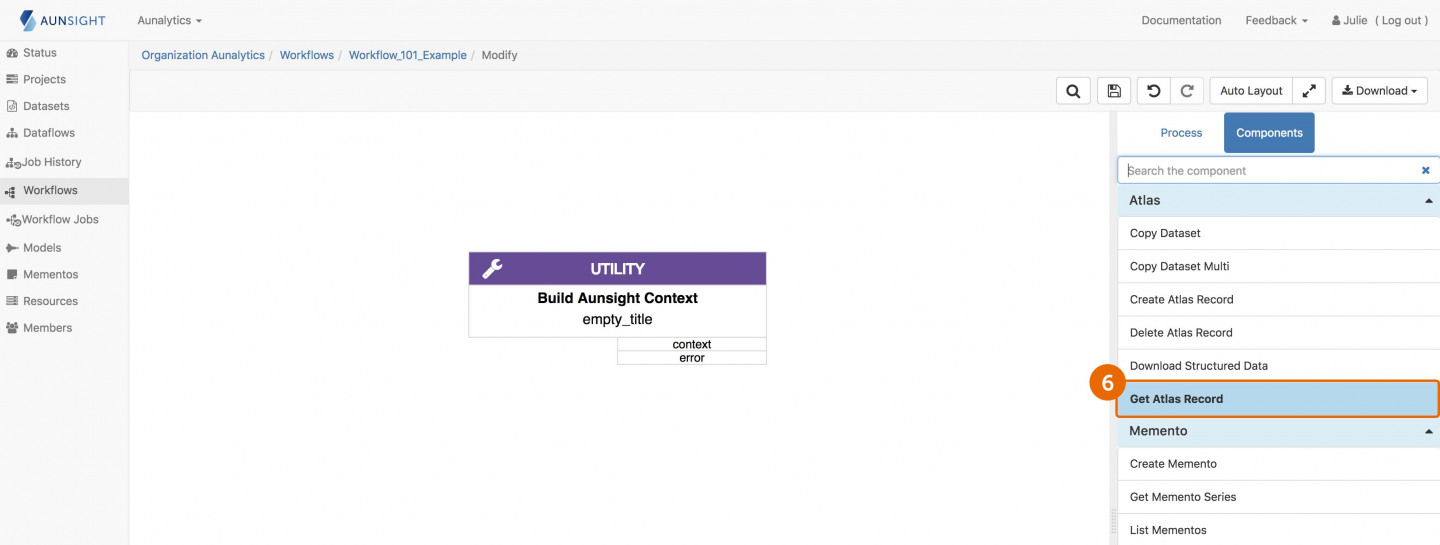

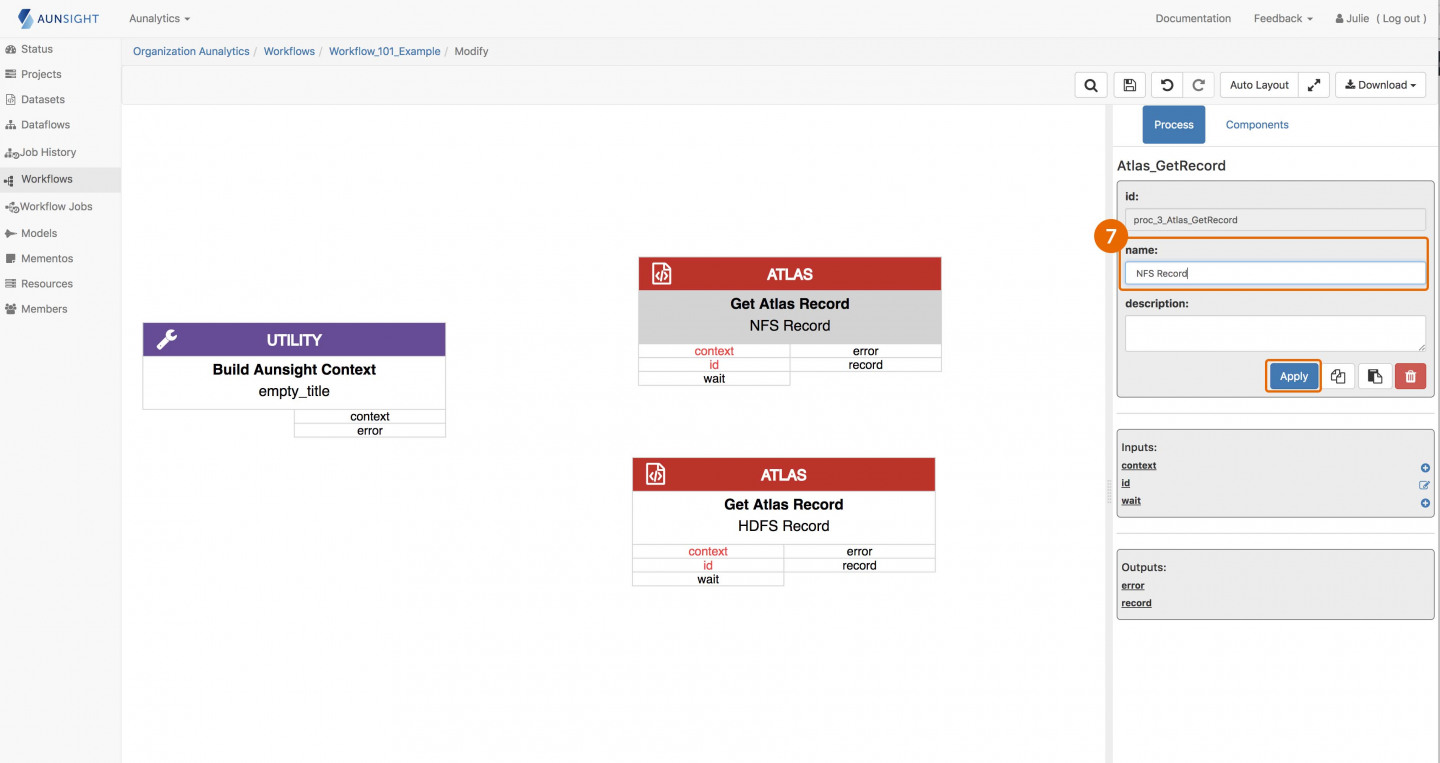

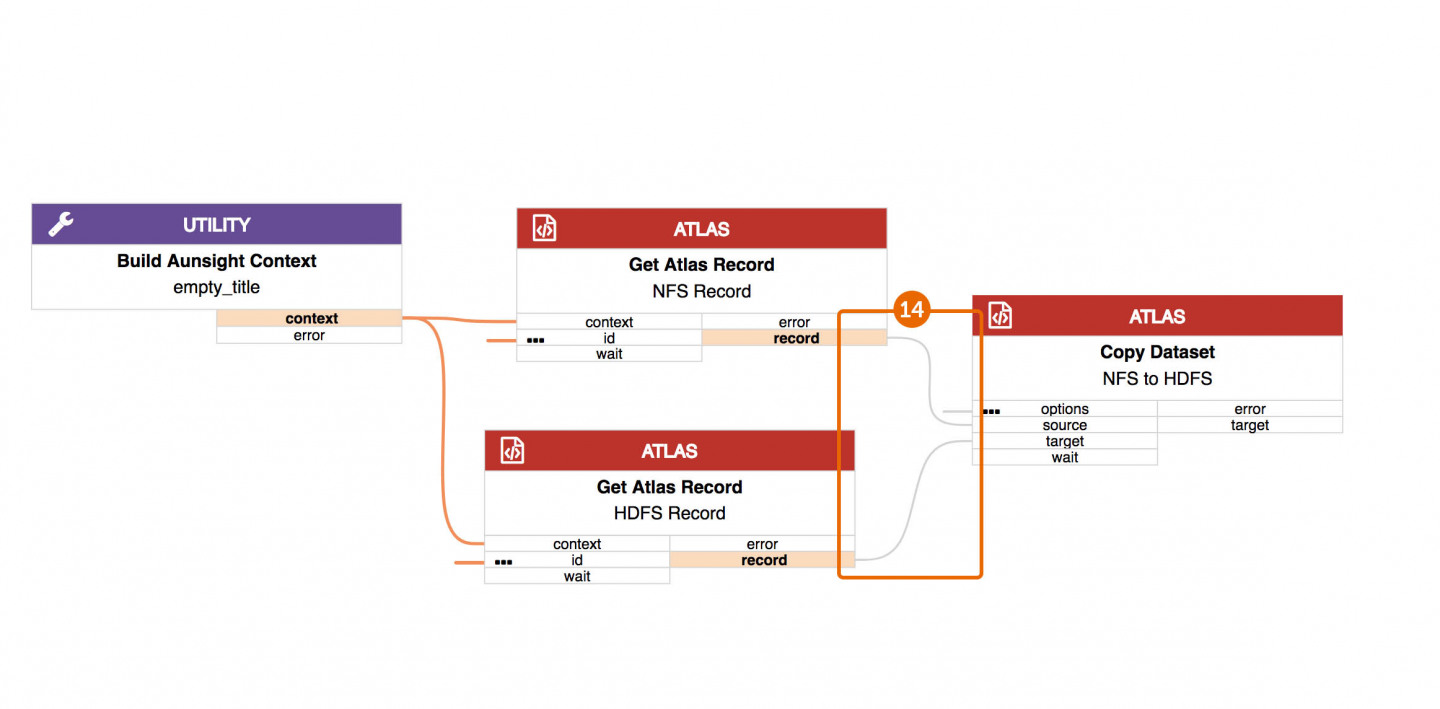

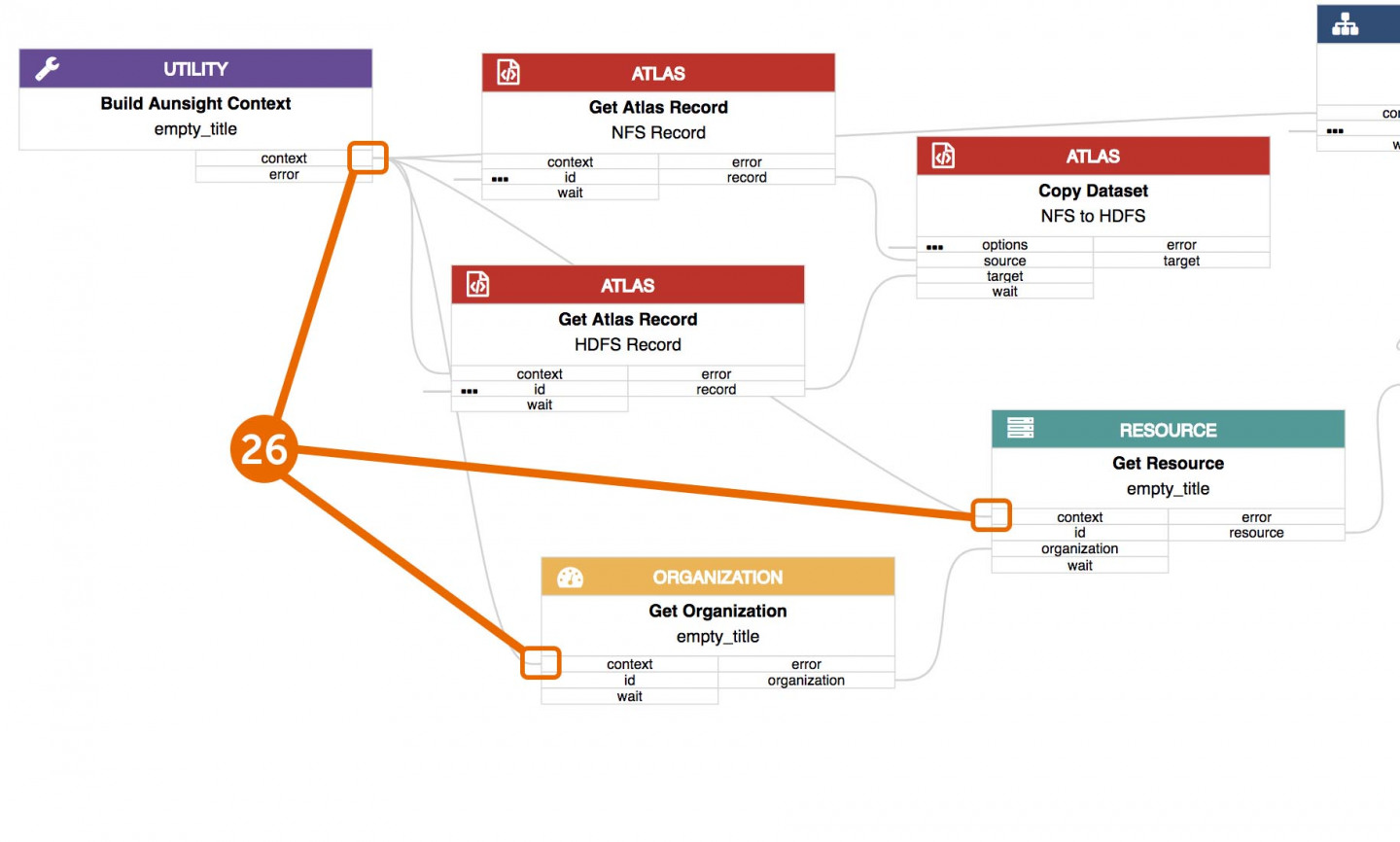

- On the right side panel, click Components and select Get Atlas Record (you can use the search box to find it quickly). The Get Atlas Record component pulls metadata about your dataset from the centralized Aunsight platform. You will need two of these components for the next step, so after adding the first one, repeat this step to add a second Get Atlas Record component.

- You should now have two of these components in your workflow. Name one NFS Record (the original dataset, located in NFS) and the other HDFS Record (a new dataset that will reside in HDFS).

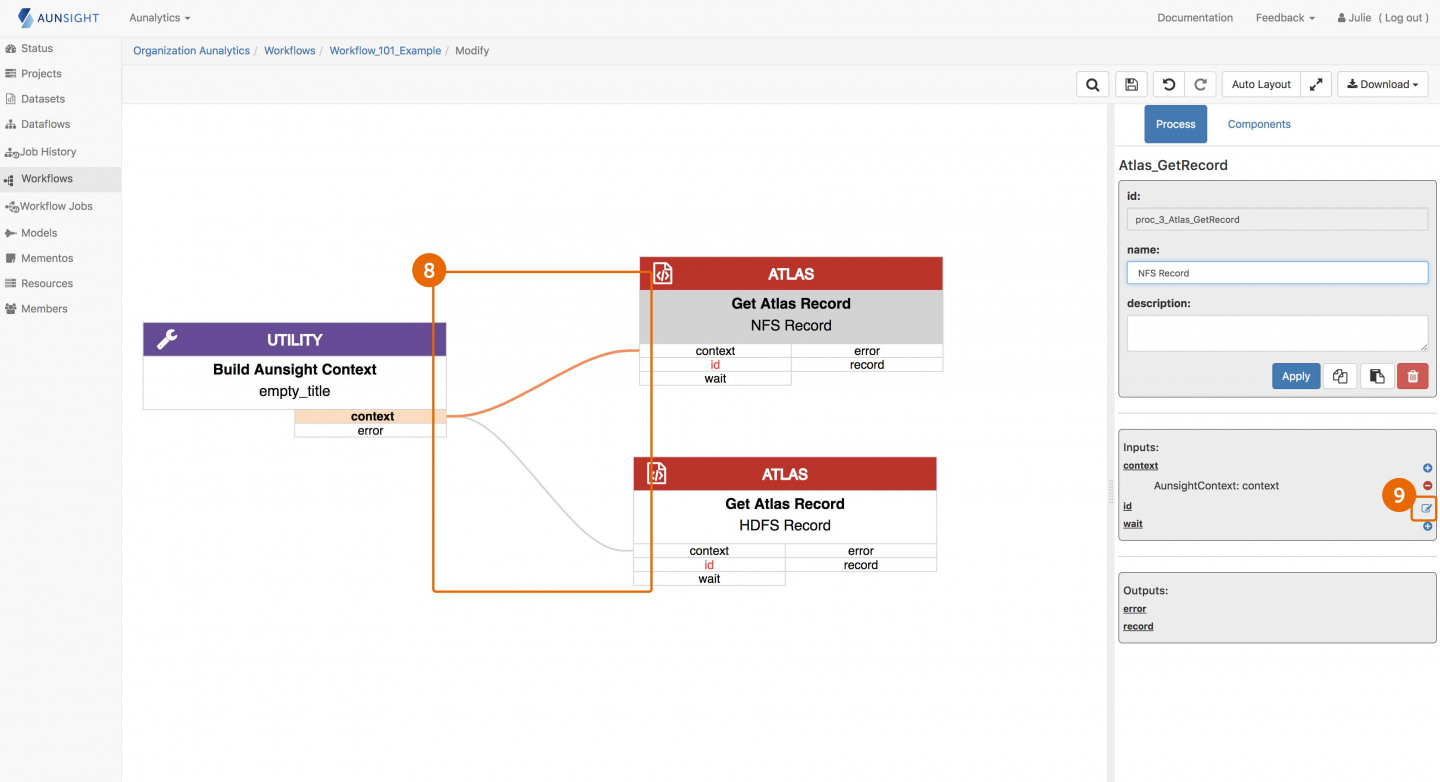

- Connect the outbound context port on the Build Aunsight Context component to the inbound context port of each Get Atlas Record component. To do this, click and drag a line from the context port on the Build Aunsight Context block to the context port on the NFS Record block, then repeat for the HDFS Record block.

TIP: To disconnect components, select one of the connected pair. Then, on the right side panel, scroll to the Inputs or Outputs section and click the red delete sign next to the connection you want to remove. (Hovering the mouse over the delete sign highlights the connection that will be deleted.)

- Select the Get Atlas Record component named NFS Record. Then, on the right side panel, scroll to the Inputs section and click the Edit (pen and paper) icon next to ID.

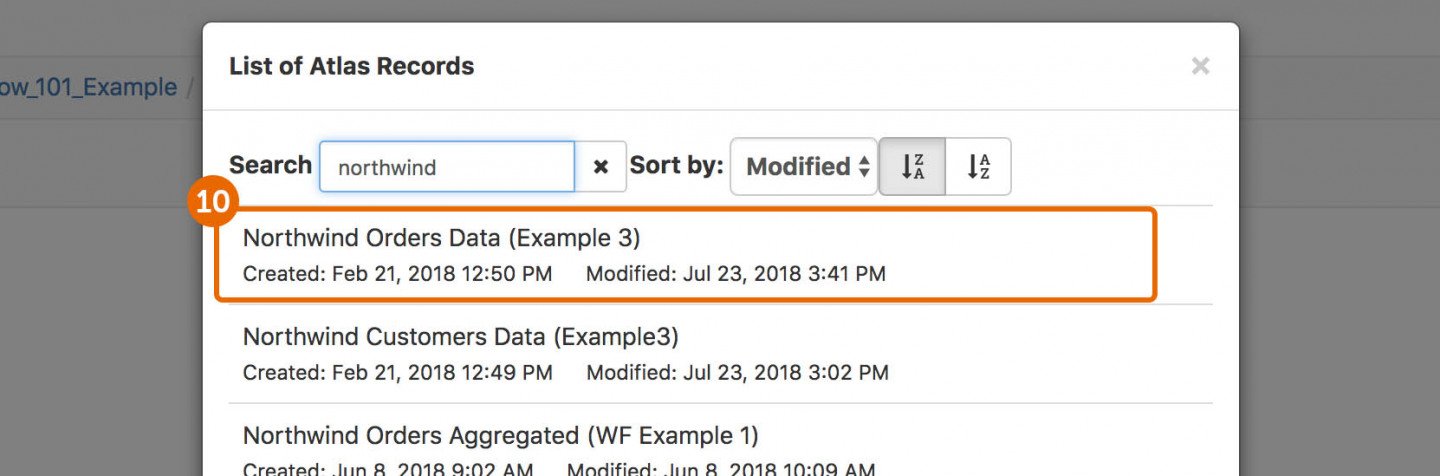

- Using the search screen that pops up, select Northwind Orders Data (Example 3).

- Create a dataset called Workflow 101 HDFS Landing Dataset and tag it with wf-example1 (see the tutorial on copying datasets).

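- Go back to your workflow and click Modify to edit it. Select the HDFS Record component and, as you did for NFS Record, edit its ID and select Workflow 101 HDFS Landing Dataset.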

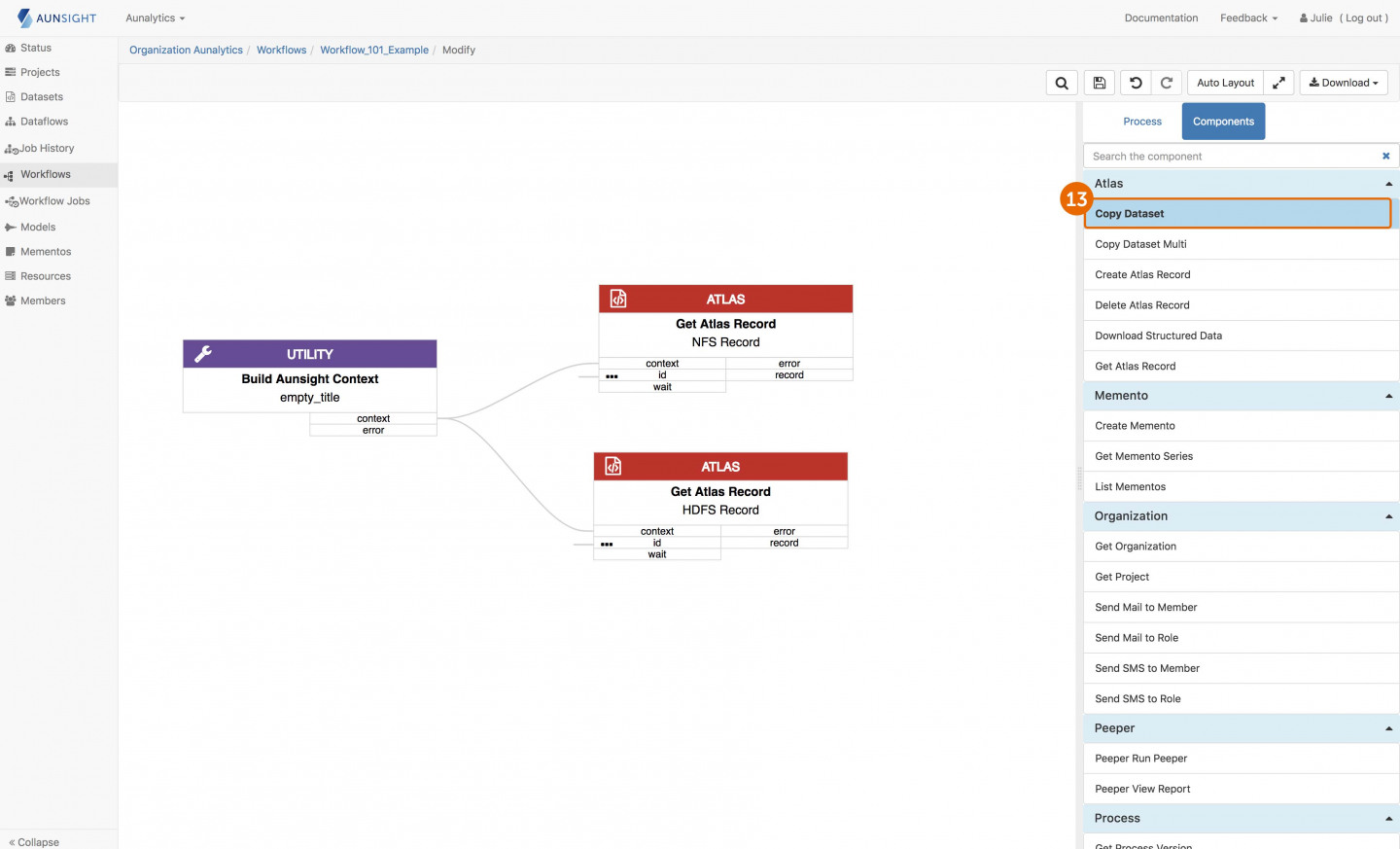

- From the Components panel, add Copy Dataset and name it “move data from NFS to HDFS”.

- Connect the outbound “record” port on NFS Record to the “source” inbound port on Copy Dataset, and connect the outbound “record” port on HDFS Record to the “target” inbound port on Copy Dataset.

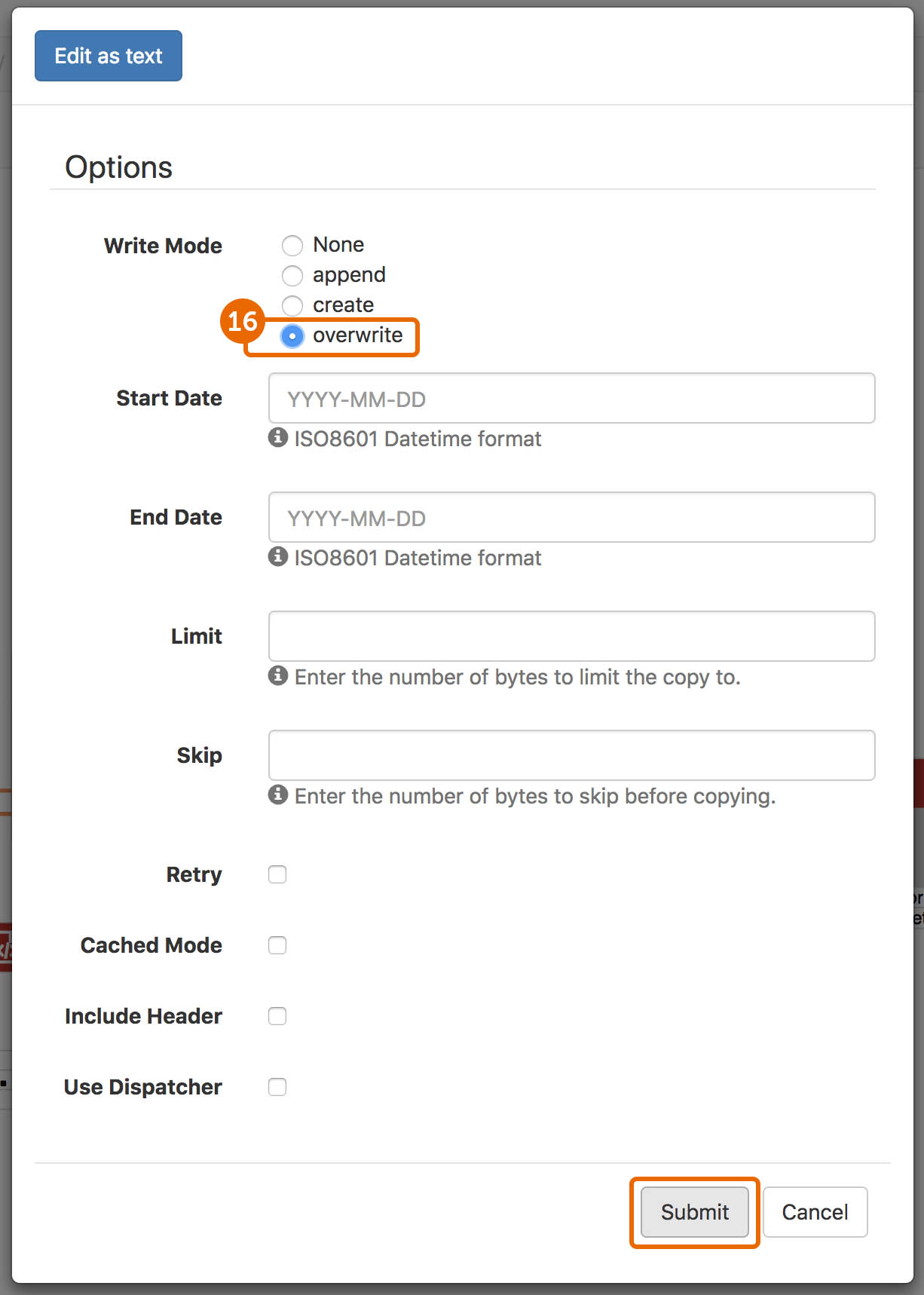

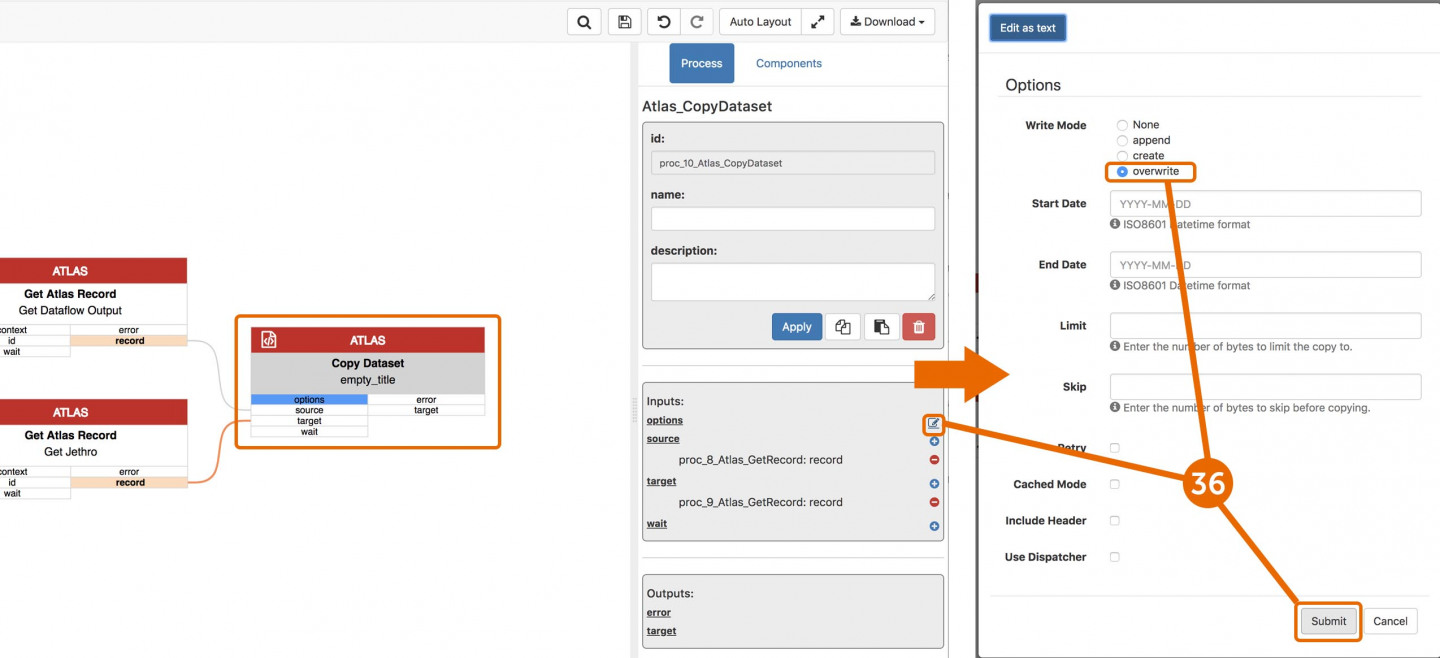

- Select the Copy Dataset component and click Options on the right side panel.

- Under Write Mode, select overwrite and click Submit. At this point you could run the workflow, and it would copy data from the source record in NFS to the target record in HDFS. If you don’t select this write mode, the workflow will fail whenever the target dataset is not empty.
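The Write Mode option decides what happens when the target already holds data, for example on the second run of this workflow. The sketch below models that behavior in Python with plain lists standing in for datasets; the `copy_dataset` function, the `TargetNotEmptyError` exception, and the `"error"` default mode are hypothetical illustrations, not the Aunsight API.

```python
class TargetNotEmptyError(Exception):
    """Raised when a copy would write into a non-empty target without overwrite."""


def copy_dataset(source, target, write_mode="error"):
    """Hypothetical model of a Copy Dataset step (not the Aunsight API).

    write_mode="overwrite" replaces whatever the target holds;
    any other value refuses to touch a non-empty target.
    """
    if target and write_mode != "overwrite":
        raise TargetNotEmptyError("target dataset is not empty")
    target[:] = list(source)  # replace the target's contents in place
    return target


# A second run of the workflow finds rows left over from the first run.
nfs_rows = [{"order_id": 10248}, {"order_id": 10249}]
hdfs_rows = [{"order_id": 10248}]

copy_dataset(nfs_rows, hdfs_rows, write_mode="overwrite")  # succeeds
try:
    copy_dataset(nfs_rows, hdfs_rows)  # default mode: target is not empty
except TargetNotEmptyError as exc:
    print("copy failed:", exc)
```

With overwrite, rerunning the workflow is safe; without it, every run after the first stops at the copy step.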

C) Set up a Dataflow to run within the Workflow

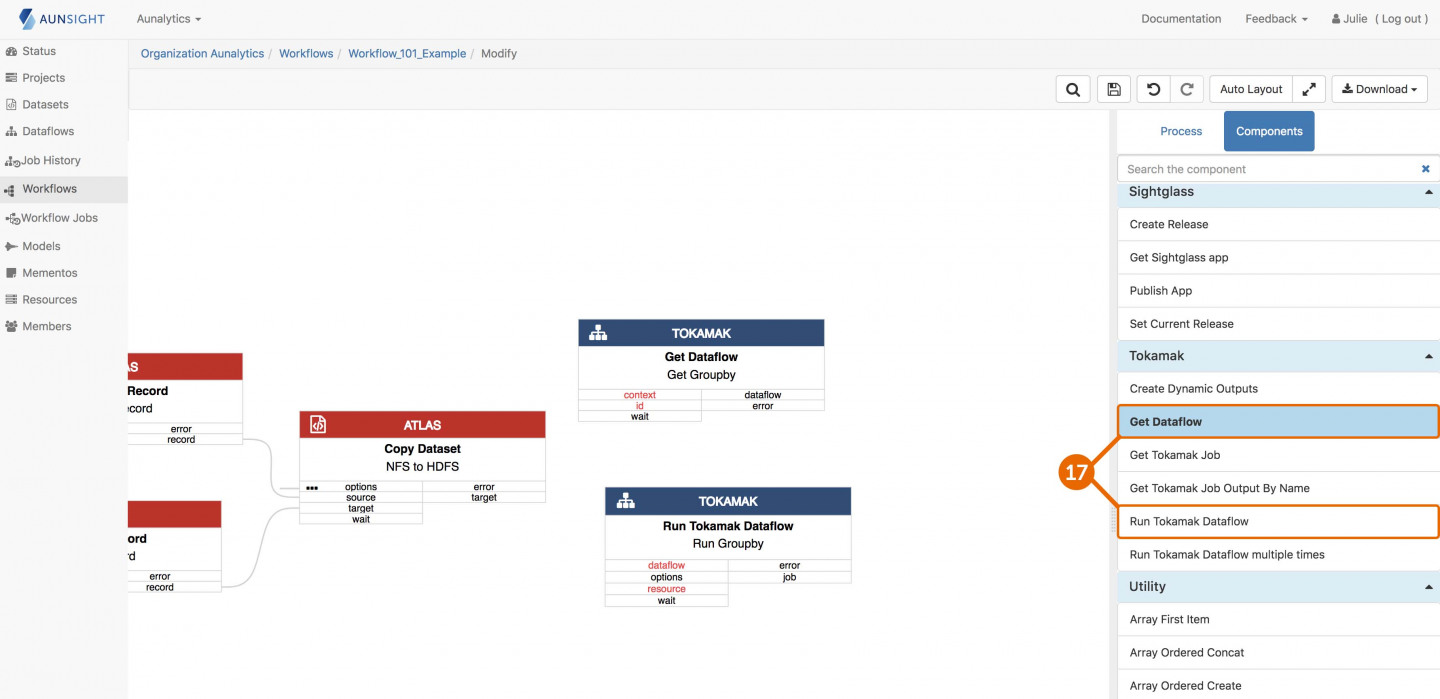

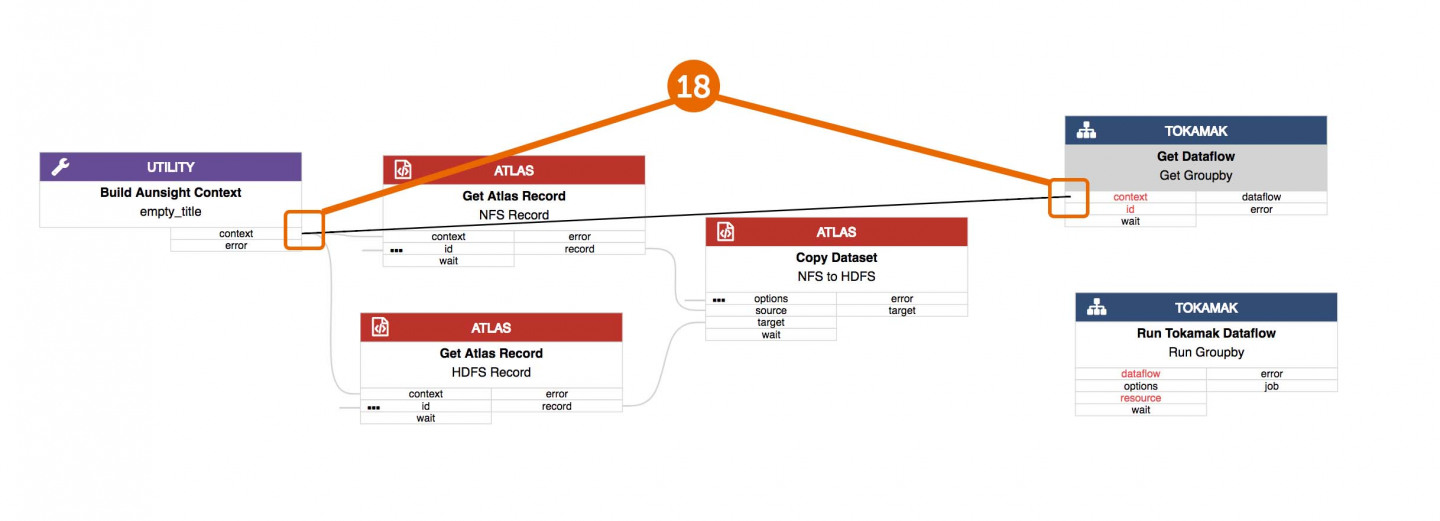

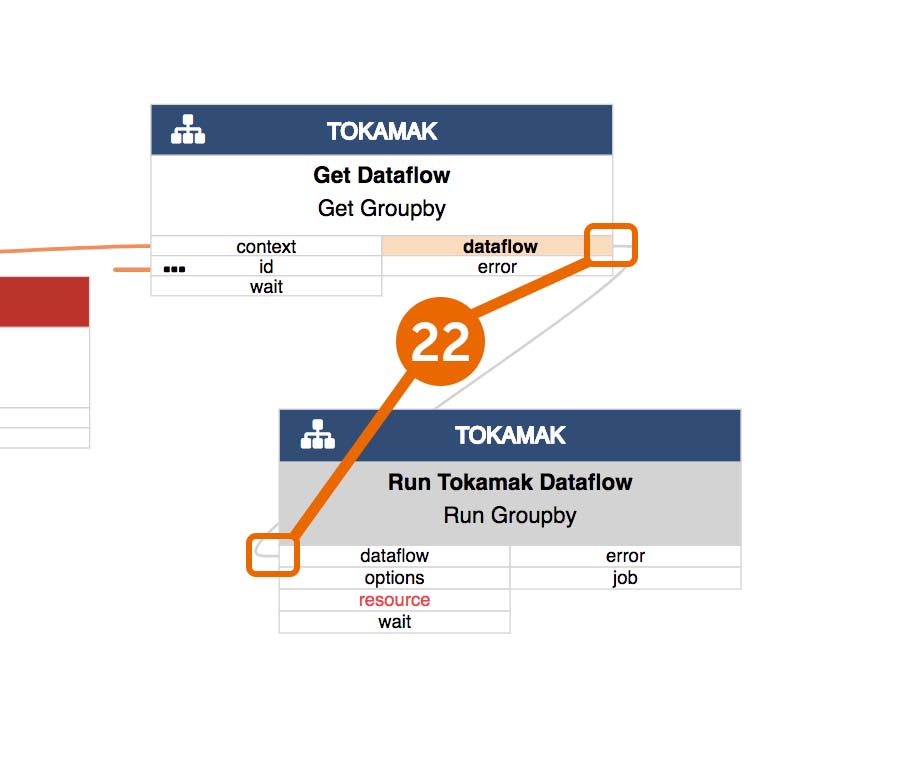

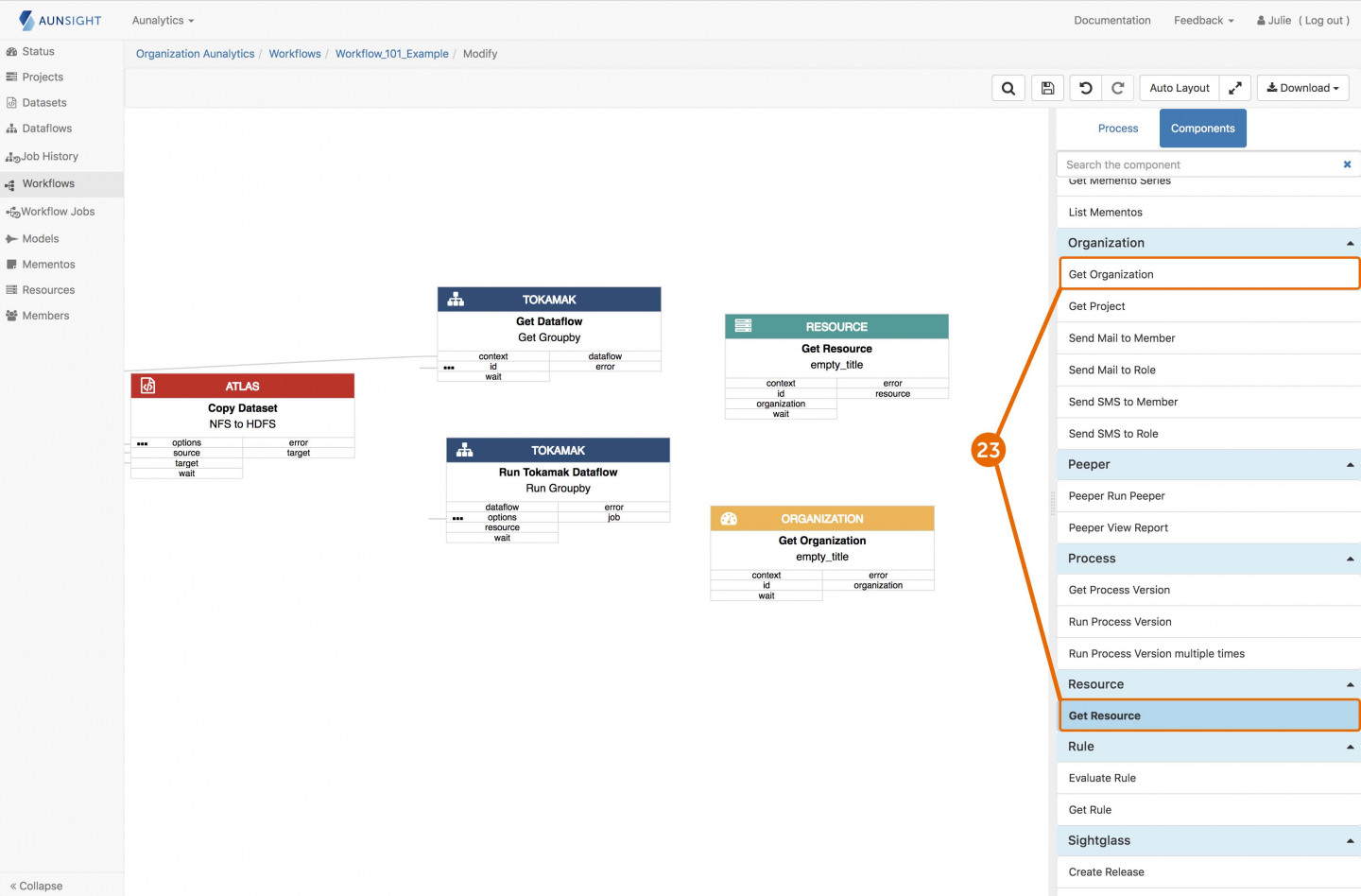

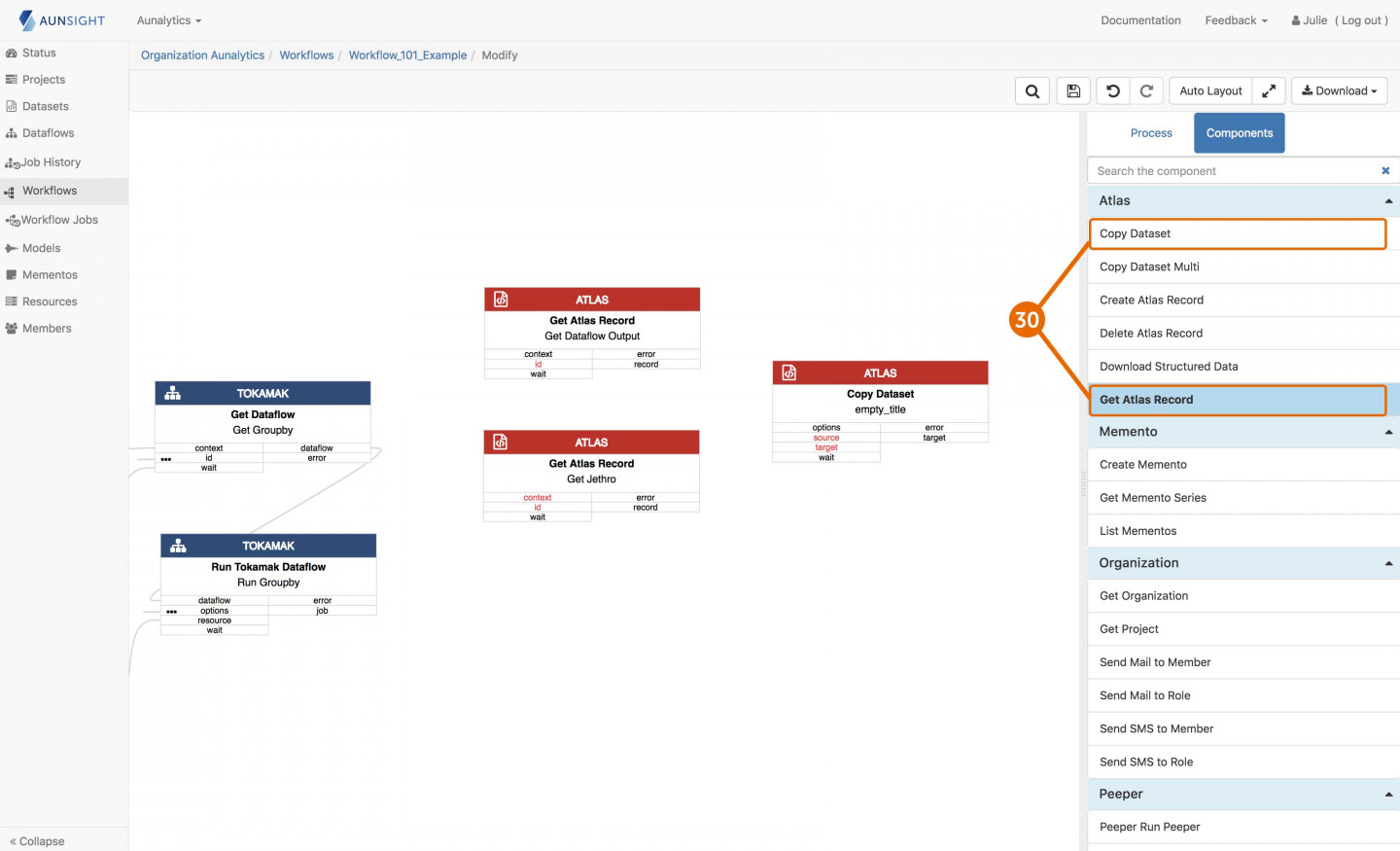

- On the right side panel, click Components and add a Get Dataflow component (name it Get Groupby) and a Run Tokamak Dataflow component (name it Run Groupby).

- Connect the context outbound port on Build Aunsight Context to the context inbound port on Get Dataflow.

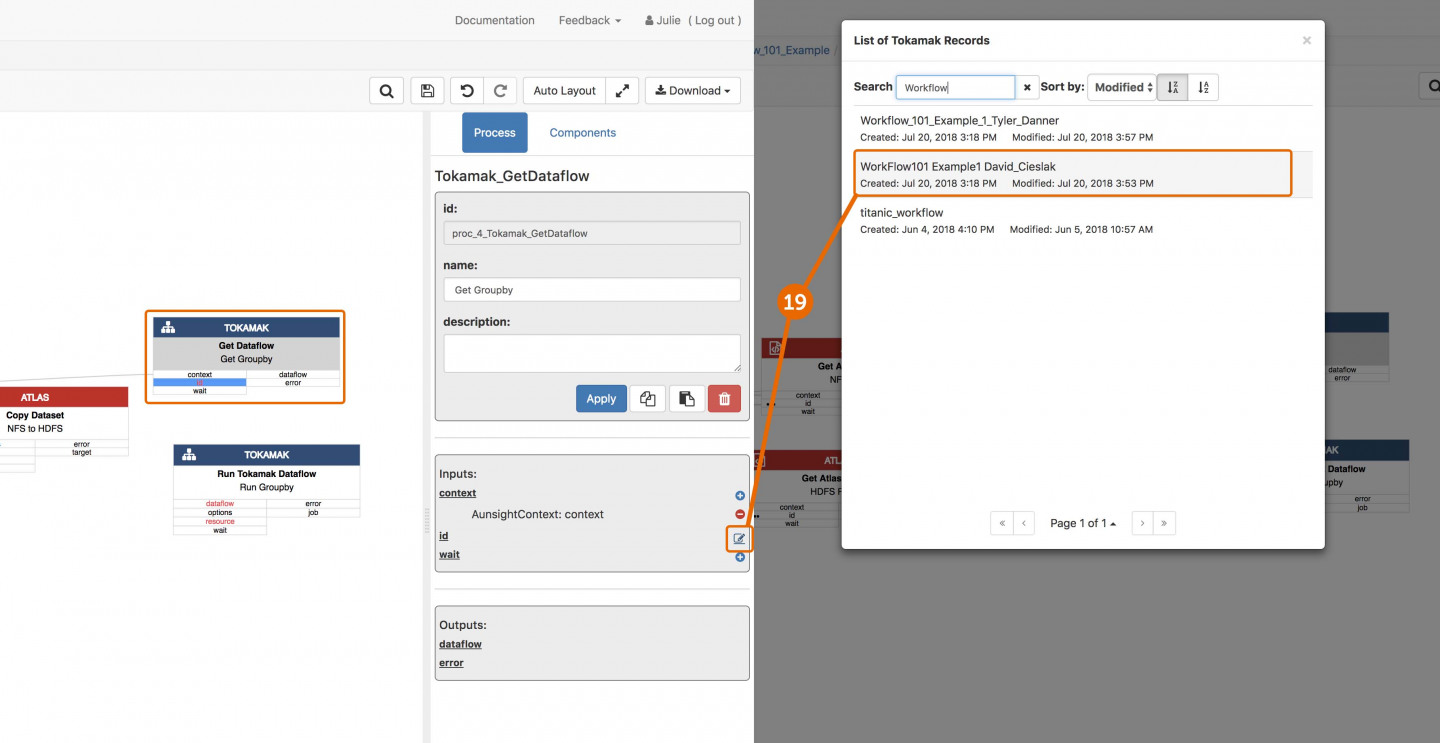

- Select Get Dataflow and edit the id field on the right side panel. Select the Workflow101 Example1 dataflow, which has been pre-built for you (or see the dataflow tutorial to build it yourself).

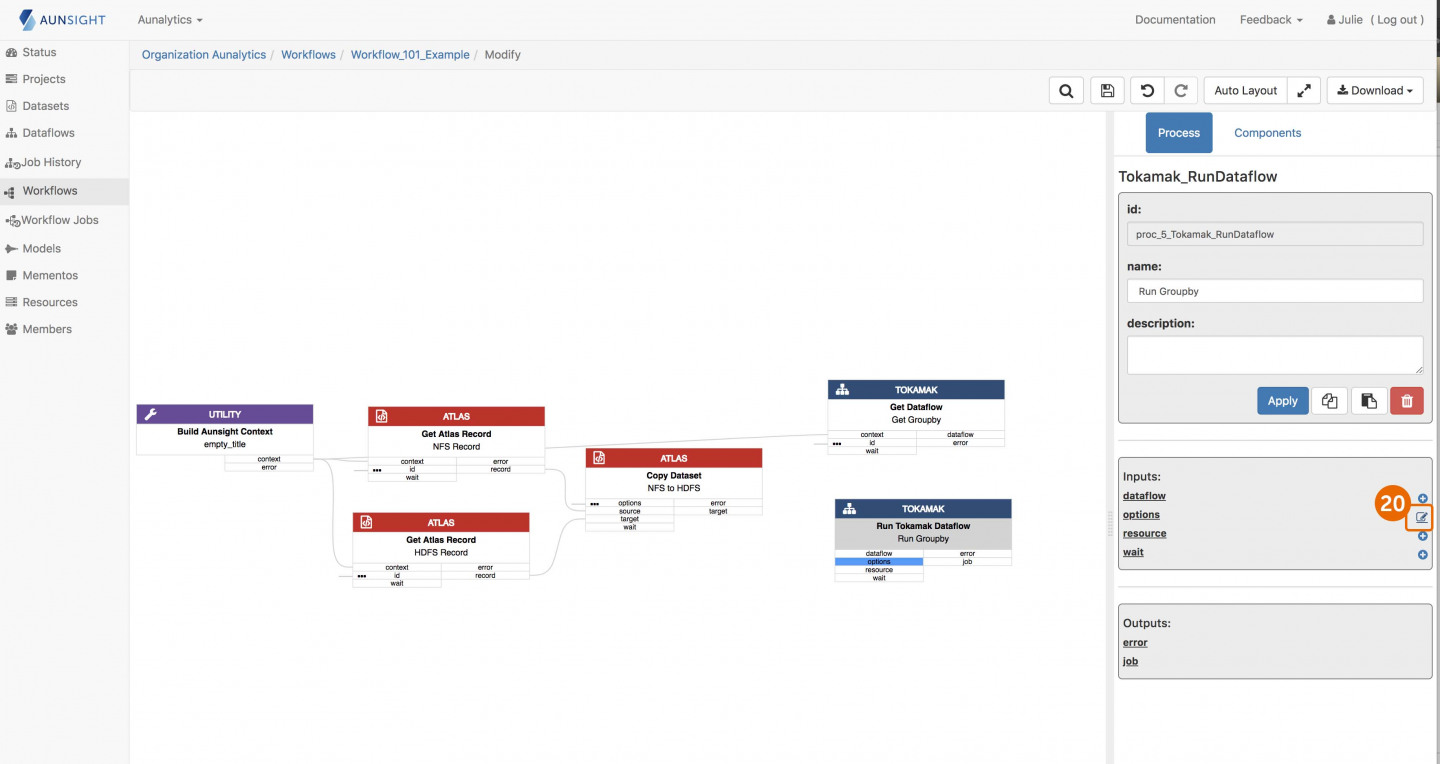

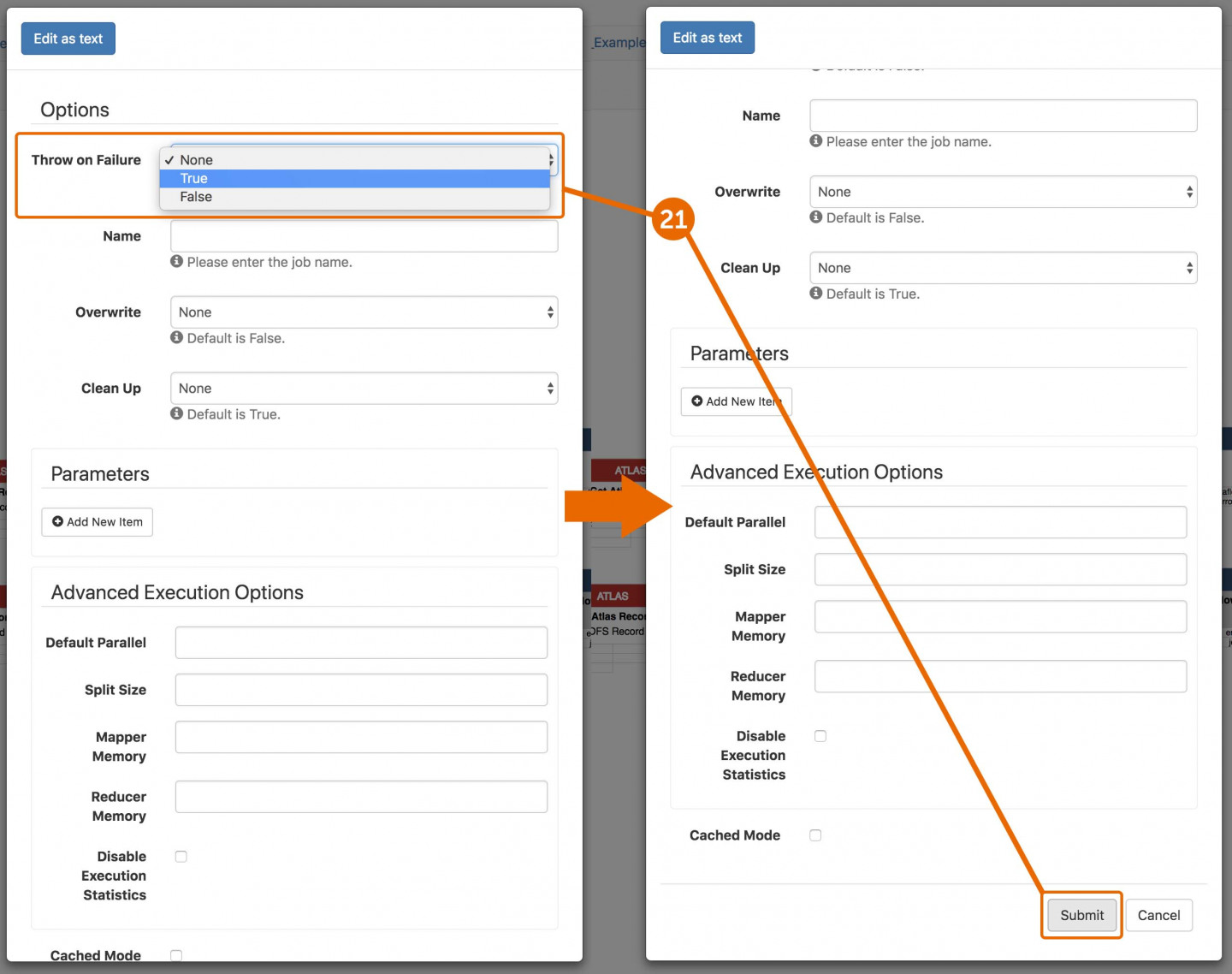

- Select Run Tokamak Dataflow (Run Groupby) and click Options on the right side panel.

- Set Throw on Failure to True and Overwrite to True and then click Submit.

- Connect the Get Dataflow dataflow outbound port to the Run Tokamak Dataflow dataflow inbound port.

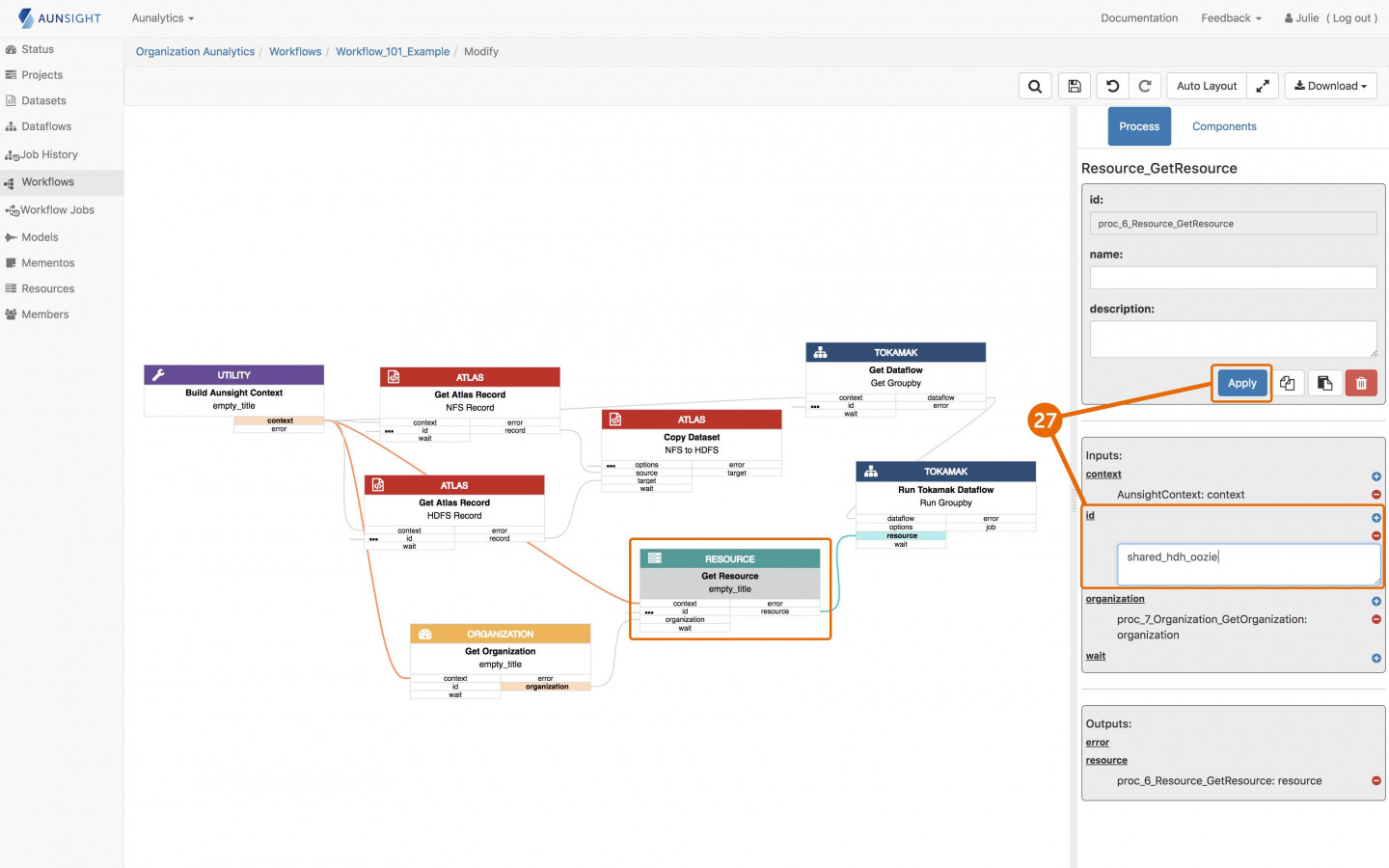

- From Components on the right side panel, add a Get Resource component and a Get Organization component.

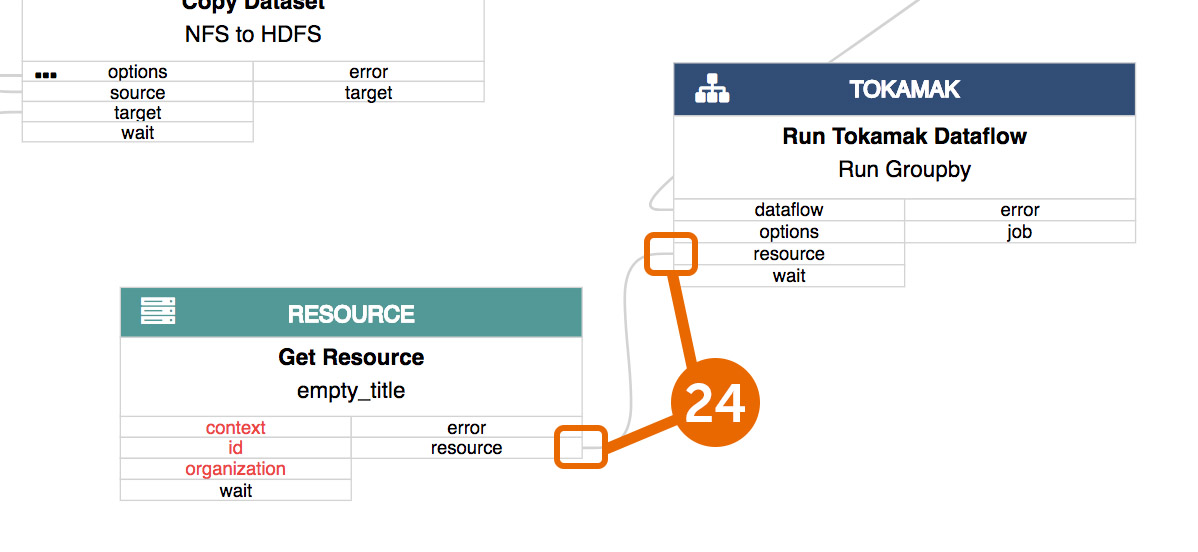

- Connect the resource outbound port on Get Resource to the resource inbound port on Run Tokamak Dataflow.

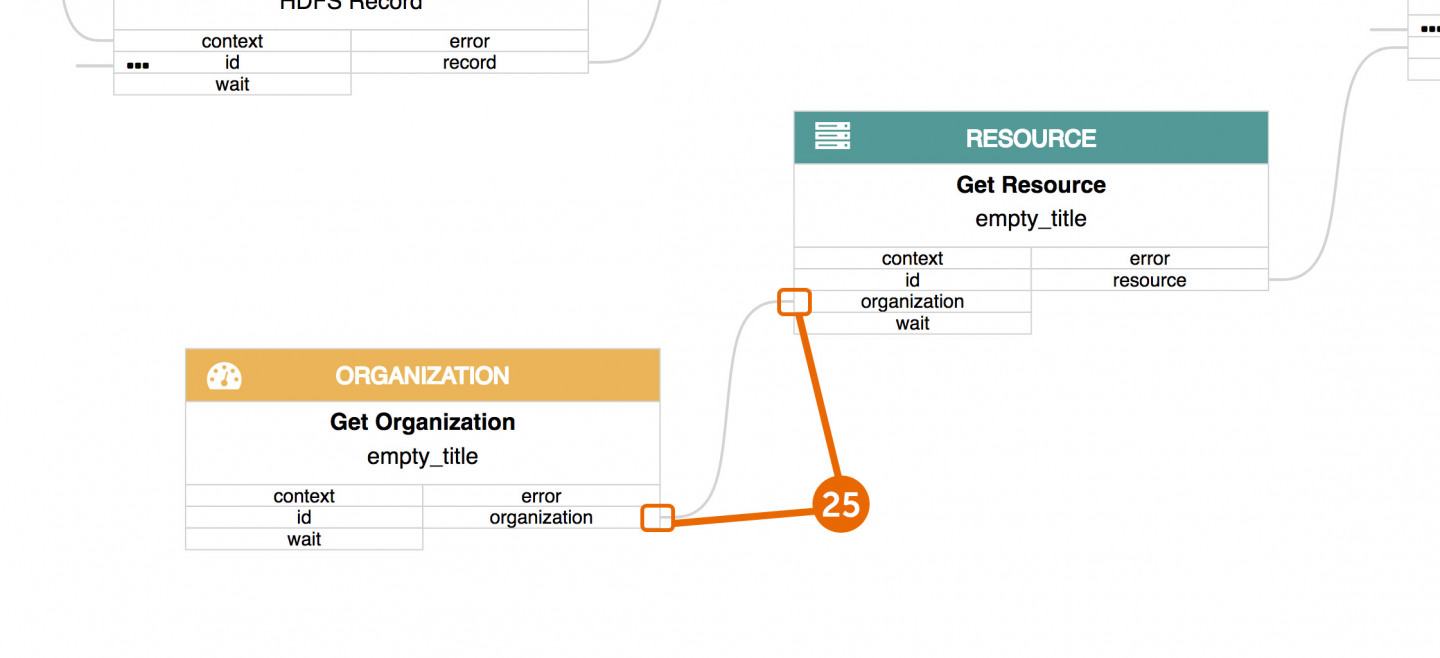

- Connect the organization outbound port on Get Organization to the organization inbound port on Get Resource.

- Connect the context outbound port from Build Aunsight Context to the context inbound port on both Get Resource and Get Organization.

- On Get Resource, using the right side panel, enter shared_hdh_oozie in the id field.

NOTE: This retrieves a reference to the Hadoop resource that will run the dataflow. You can find it on the resource tab; it refers to the HDFS resource your organization has set up. Contact Aunalytics support if you need help determining which resource to use.

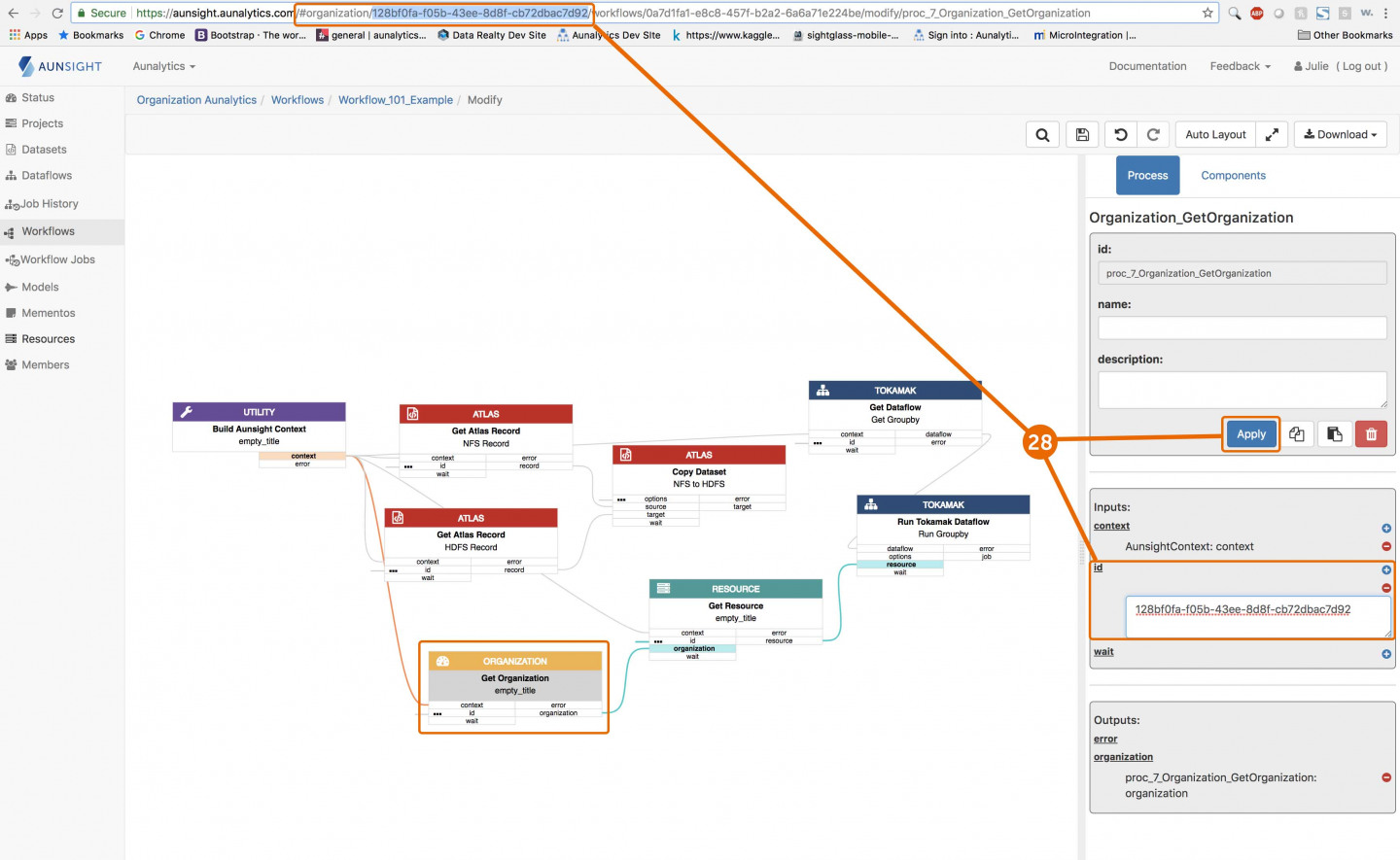

- On Get Organization, enter your organization’s UUID in the id field. The UUID appears in the URL string following “#organization”, and is also available from your system administrator or Aunalytics support.
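If you prefer to pull the UUID out of the browser URL programmatically, the Python sketch below does so. It assumes only what the step above states: the UUID is the first standard 8-4-4-4-12 hexadecimal UUID appearing after “#organization” in the URL. The function name `organization_uuid` and the example URL are made up for illustration.

```python
import re
import uuid
from typing import Optional

# Standard textual UUID form: 8-4-4-4-12 hexadecimal digits.
UUID_PATTERN = re.compile(
    r"[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}"
)


def organization_uuid(url: str) -> Optional[str]:
    """Return the first UUID that follows '#organization' in url, or None."""
    marker = url.find("#organization")
    if marker == -1:
        return None
    # Only search the part of the URL after the marker.
    match = UUID_PATTERN.search(url, marker)
    if match is None:
        return None
    # uuid.UUID validates the value and normalizes it to lowercase.
    return str(uuid.UUID(match.group(0)))


# Hypothetical URL; substitute the one shown in your browser.
example = "https://aunsight.example.com/#organization/1B4E28BA-2FA1-11D2-883F-0016D3CCA427/workflows"
print(organization_uuid(example))
```

Returning `None` rather than raising makes it easy to spot a URL that does not contain an organization UUID at all.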

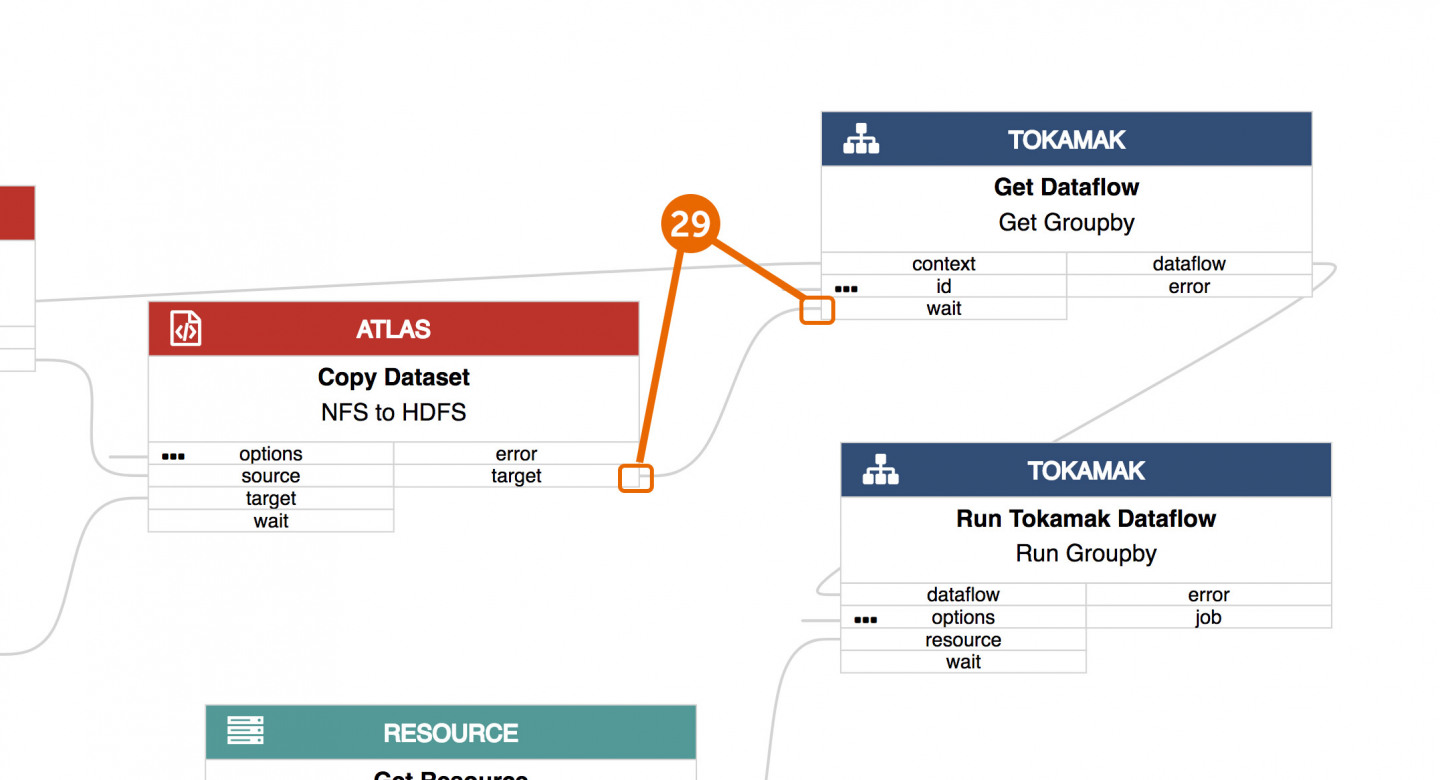

- Connect the target outbound port on Copy Dataset to the wait inbound port on Get Dataflow. This creates a dependency so that the copy finishes before the dataflow runs. You can read more about the workflow execution model in the Aunsight documentation.
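One way to picture the effect of the wait port is as a dependency graph: a component becomes eligible to run only after every component feeding its inbound ports has finished. The Python sketch below orders the components built so far with the standard library’s `graphlib.TopologicalSorter` (Python 3.9+). It is a simplified model that assumes strictly sequential execution; Aunsight’s actual scheduler may differ, for example by running independent components in parallel.

```python
from graphlib import TopologicalSorter

# Each component maps to the components whose outputs it consumes.
# Names follow this tutorial; the graph is an illustration, not an Aunsight export.
dependencies = {
    "Build Aunsight Context": set(),
    "NFS Record": {"Build Aunsight Context"},
    "HDFS Record": {"Build Aunsight Context"},
    "move data from NFS to HDFS": {"NFS Record", "HDFS Record"},
    "Get Organization": {"Build Aunsight Context"},
    "Get Resource": {"Build Aunsight Context", "Get Organization"},
    # The wait port: Get Groupby may not start until the copy has finished.
    "Get Groupby": {"Build Aunsight Context", "move data from NFS to HDFS"},
    "Run Groupby": {"Get Groupby", "Get Resource"},
}

order = list(TopologicalSorter(dependencies).static_order())
for step, name in enumerate(order, start=1):
    print(step, name)
```

In every valid ordering the copy precedes Get Groupby. Remove the copy from Get Groupby’s dependencies (that is, drop the wait connection) and nothing forces the dataflow to wait for fresh data in HDFS.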

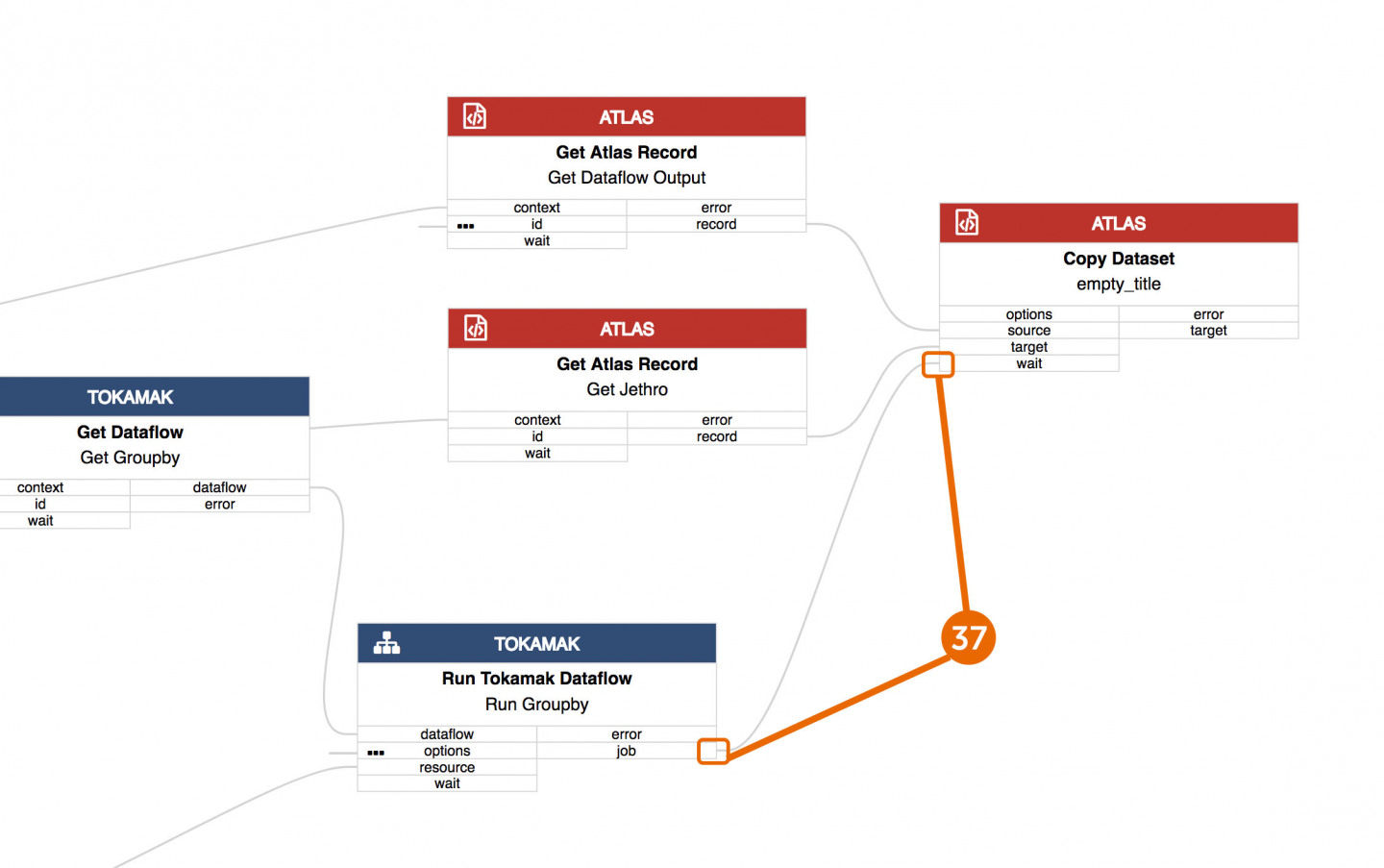

D) Publish data to the accelerator

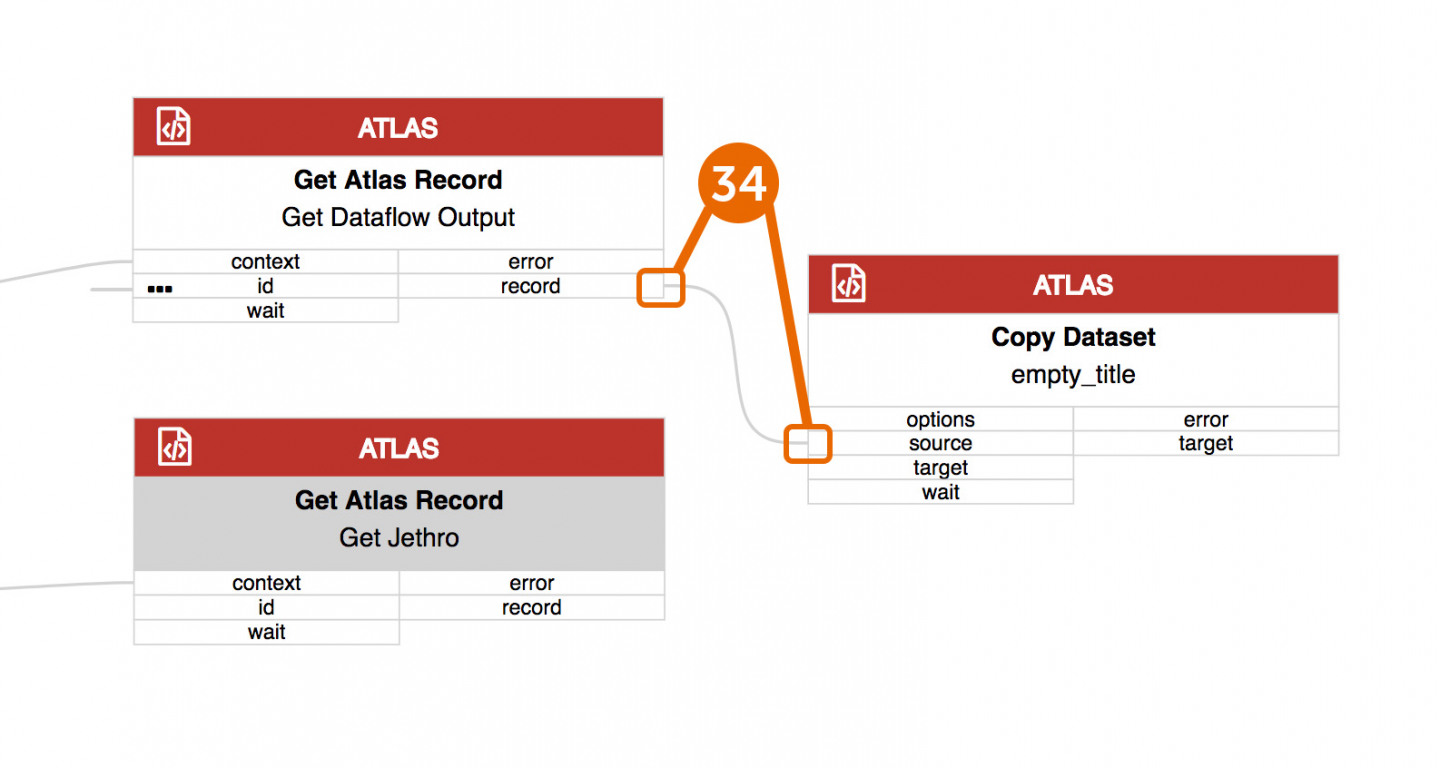

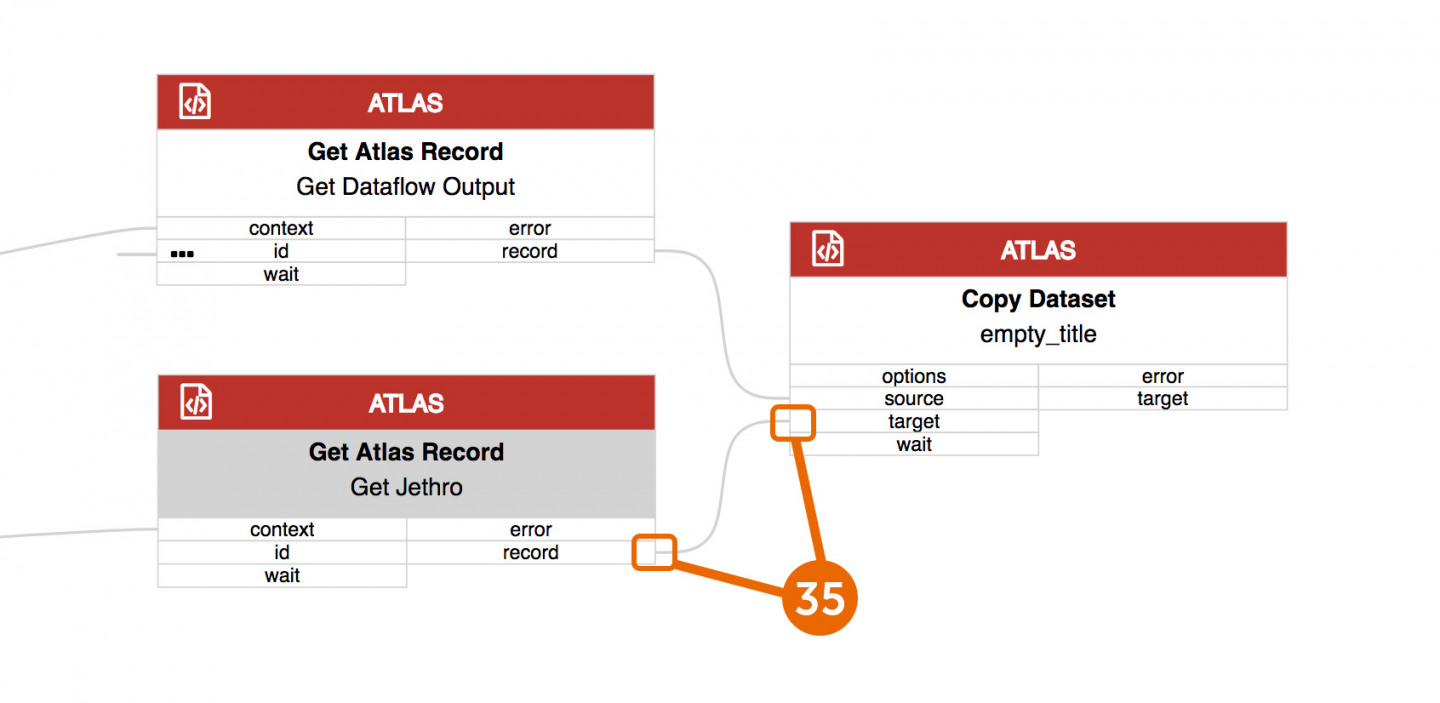

- Create two more Get Atlas Record components and a Copy Dataset component. Rename one of the Get Atlas Record components Get Dataflow Output, and the other Get Jethro.

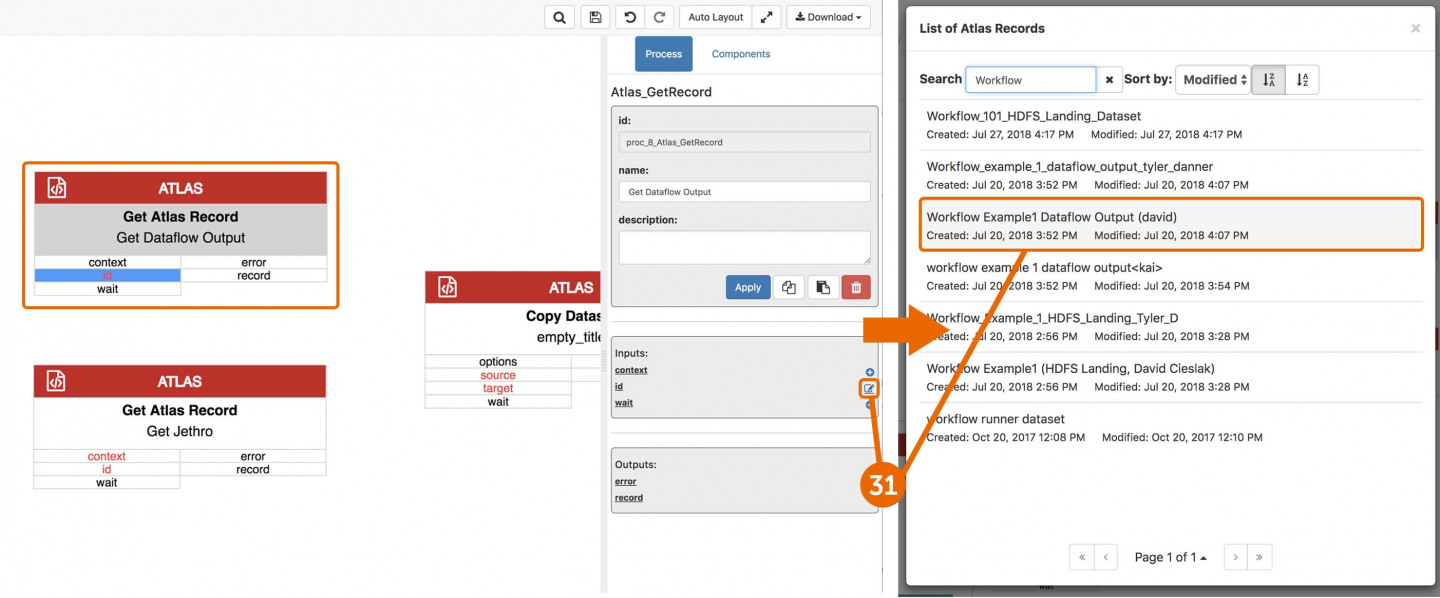

- On the Get Dataflow Output component, edit the id and select Workflow Example Dataflow Output. (To learn how to create an empty dataset, see the Dataset Creation tutorial.)

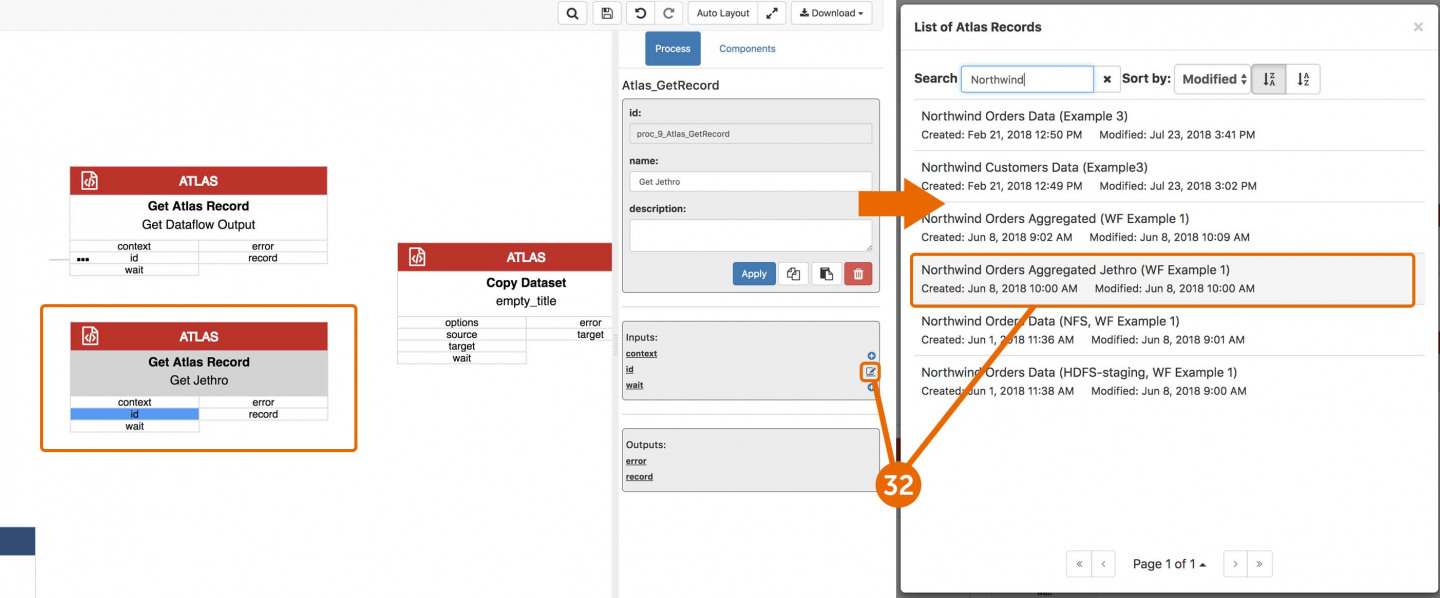

- On the Get Jethro component, edit the id and select Northwind Orders Aggregated Jethro.

- Connect the context outbound port on Build Aunsight Context to the inbound context ports on both new Get Atlas Record components.

- Connect the record outbound port on Get Dataflow Output to the source inbound port on the new Copy Dataset component.

- Connect the record outbound port on Get Jethro to the target inbound port on the new Copy Dataset component.

- Select the new Copy Dataset component, click Options, set Write Mode to overwrite, and click Submit.

- Connect the job outbound port on Run Groupby to the wait inbound port on the new Copy Dataset component, so that publishing waits for the dataflow to finish.

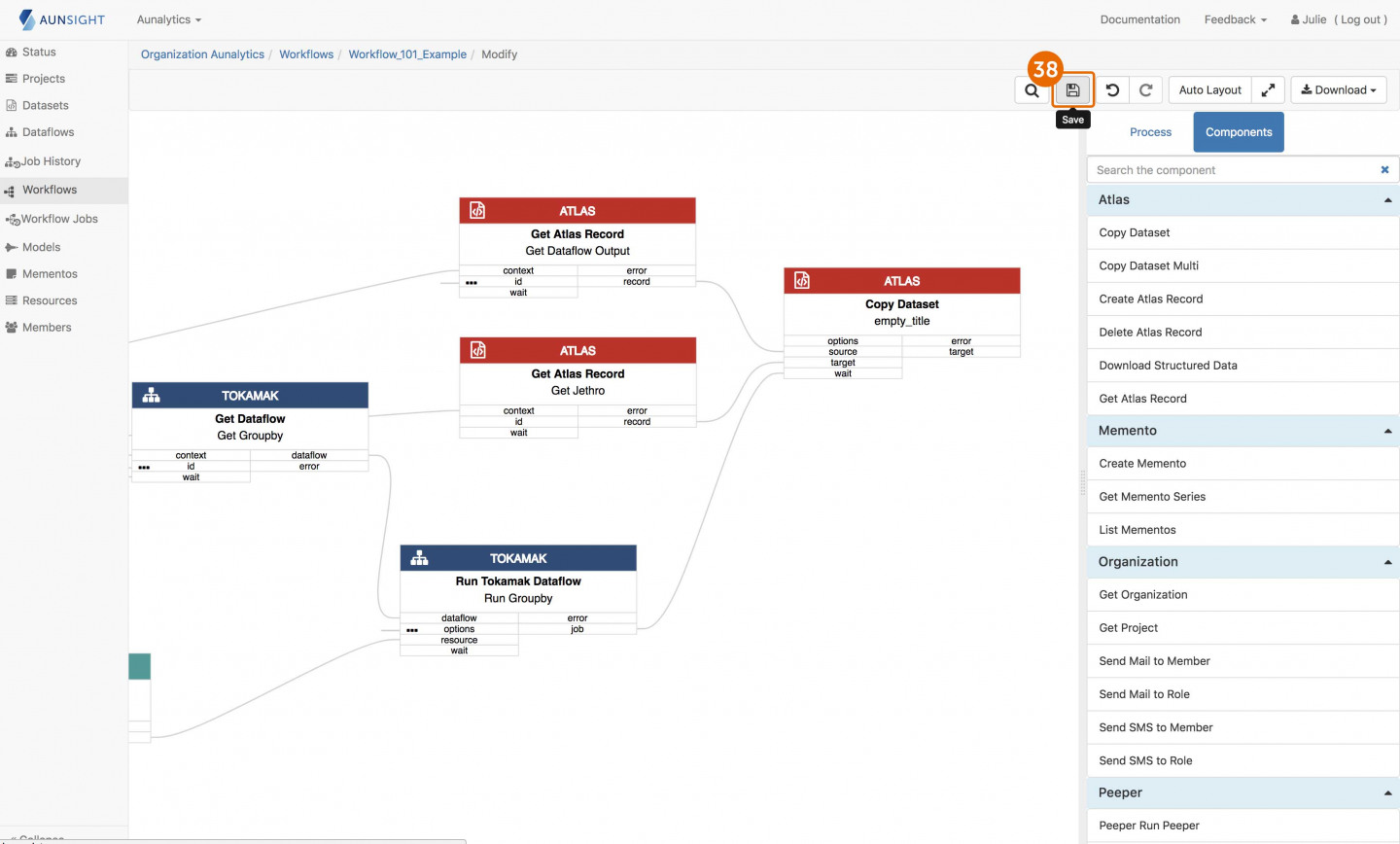

- Click Save.

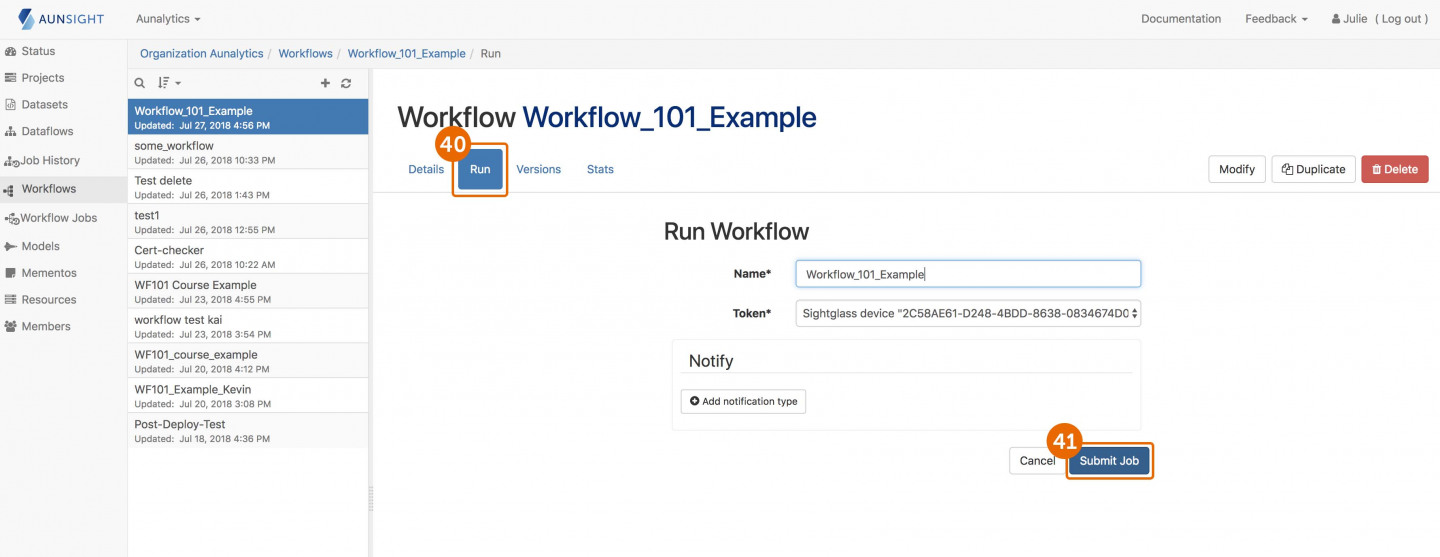

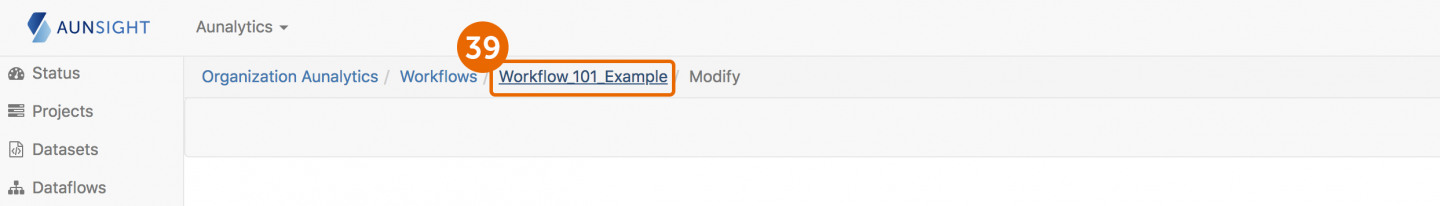

- Return to the Workflow page.

- Click Run.

- Click Submit Job.

Congratulations, your job is now running!